The Complete Guide to LLM Evaluation Tools in 2026

Compare LLM Evaluation Platforms, Metrics, and Performance at Enterprise Scale

Look, large language models are everywhere now. Customer support, internal decision-making, content pipelines, code generation. Name a department and there is probably a language model involved somewhere. That part is not news to anyone reading this in 2026.

What catches people off guard is how quickly things fall apart when you scale these systems without checking whether they actually work properly. Models hallucinate. They pick up weird biases from training data. They drift from whatever business goal you originally designed them for. And none of that shows up in a demo. It shows up three months into production, usually at the worst possible time.

Old-school LLM evaluation just does not cut it anymore. Running a few test prompts and eyeballing the results? That worked when LLMs were a novelty. Now they are infrastructure. The LLM evaluation approach you need in 2026 has to go deep on performance analysis, slot into whatever AI pipeline your team already runs, and handle testing at a scale that would make a human review team quit on the spot.

The Cost of Inadequate LLM Evaluation Tools

Still not convinced this is worth worrying about? These real-world disasters might change your mind.

Remember when CNET started publishing finance articles written by AI? The errors were bad enough, but the real damage was the public correction cycle that followed. Readers lost trust, and that trust took months to rebuild. All because nobody caught the mistakes before they went live. [1]

Apple ran into something similar at the turn of 2025. The AI-generated notification summaries in Apple Intelligence started producing misleading headlines and, even worse, completely made-up alerts attributed to real news outlets. Major news organizations, the BBC among them, were not happy about it. Press freedom groups piled on. In January 2025, Apple suspended the summaries for news apps. [2]

And then there is the Air Canada chatbot case from 2024. A customer asking about bereavement fares got totally wrong refund information from the airline’s chatbot. When the dispute went to British Columbia’s Civil Resolution Tribunal, the ruling sent shockwaves through the industry: companies are responsible for what their bots say. Full stop. You cannot just slap a disclaimer on it and walk away. That decision is still cited whenever AI liability comes up. [3]

These are not cautionary tales from some obscure startup. These are household names that got burned. The lesson is simple but uncomfortable: skipping proper LLM evaluation is not just a technical oversight. It is a business risk that can cost you money, trigger regulatory action, and leave a stain on your reputation that no PR campaign can fully wash out.

How to Choose the Right Evaluation Tool in 2026

So you have decided to take LLM evaluation seriously. Good. Now comes the part where you have to actually pick a tool, which is its own kind of headache. Here is what to pay attention to.

Metrics coverage has to be thorough. Accuracy alone tells you almost nothing useful. You want hallucination rates, bias and fairness measurements, groundedness scores, and factual verification. And you need all of that working across different types of tasks, not just the easy ones.

Developer experience is one of those things that seems minor until it isn’t. If the vendor’s SDK is clunky, or if integrating it with your existing ML pipeline requires three weeks of custom glue code, your team will resent the tool. Strong documentation and clean APIs save more time than most people realize.

Real-time monitoring is not optional. Not in production. If your LLM evaluation tool only runs in batch mode after the fact, you are going to miss problems when they are still small enough to fix easily. You need something that watches your models while they run.

Usability matters across the whole team. Your ML engineers should not be the only people who can read the dashboards. Product managers, compliance officers, even executives need to pull insights without filing a support request every time.

Think about what happens after the sale. How fast does the vendor respond when something breaks? Is there a community of other users you can learn from? How good is the documentation, really? Not the marketing page version, but the actual getting-things-done version. These details determine whether a tool works for you over the long haul or becomes shelfware.

With those criteria in hand, let us dig into the five platforms that are leading the pack in 2026: Future AGI, Galileo, Arize, MLflow, and Patronus AI.

1. Future AGI

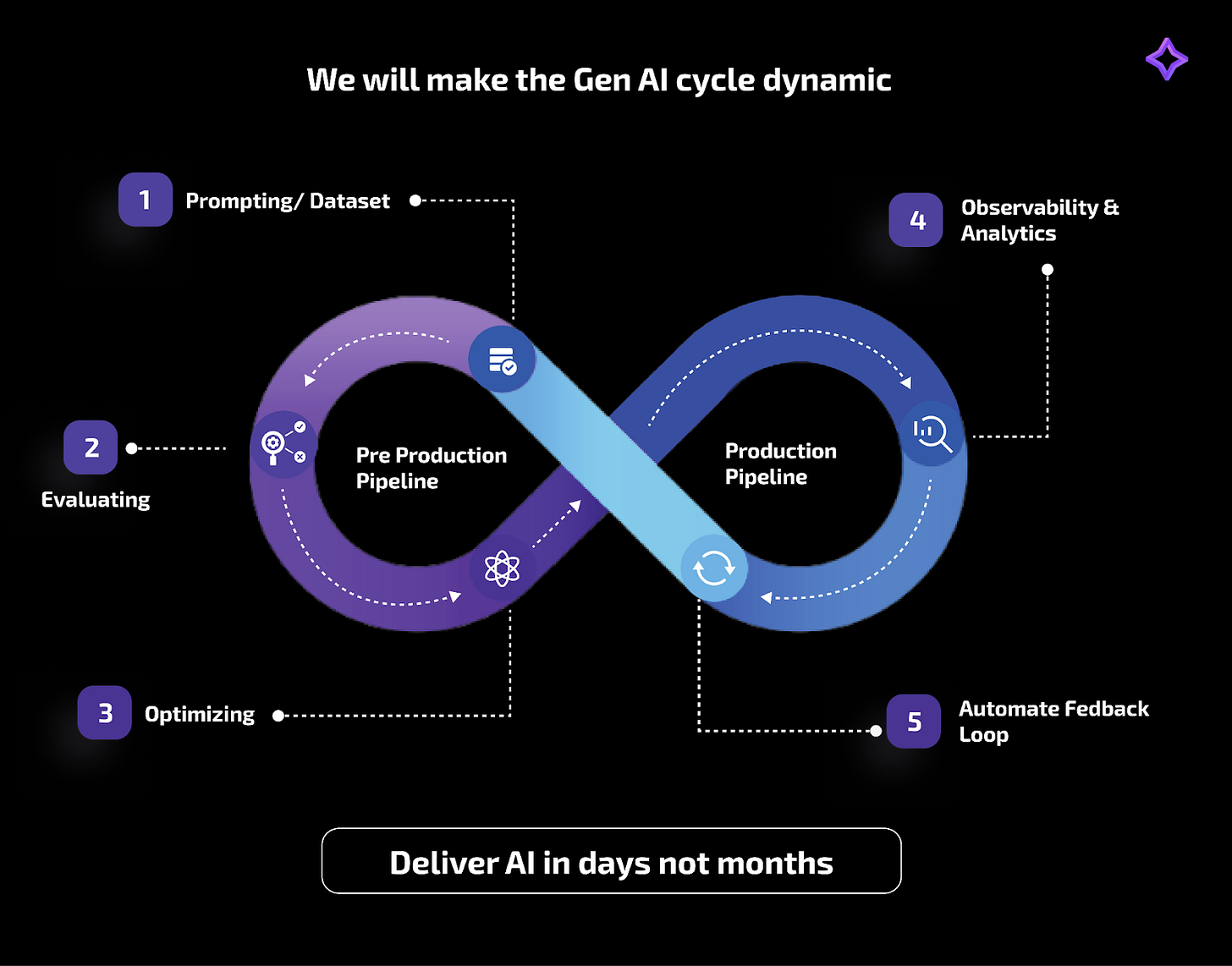

Future AGI built its LLM evaluation framework on a research-first philosophy. It scores model responses on accuracy, relevance, coherence, and compliance. What makes it particularly useful is that you can benchmark performance across different prompting strategies, catch systematic problems before they snowball, and verify that outputs meet both quality benchmarks and regulatory standards.

Core LLM Evaluation Capabilities

Conversational Quality: Two metrics stand out here. Conversation Coherence tracks whether dialogue actually flows in a way that makes logical sense. Conversation Resolution measures whether the model managed to resolve the user’s question by the end of the exchange. Simple concept, surprisingly hard to measure well.

Content Accuracy: The platform flags hallucinations and factual errors by checking whether model outputs stay grounded in the context and instructions they were given. No grounding, no pass.

RAG Metrics: If you are running retrieval-augmented generation, Chunk Utilization and Chunk Attribution show you whether models are actually using the knowledge chunks they retrieve or just ignoring them. Context Relevance and Context Sufficiency go further and check whether the retrieval step pulled in enough of the right information in the first place.

Generative Quality: Translation evaluations verify that meaning and tone survive when content moves between languages. Summary assessments check whether summaries actually capture the important parts of the source material or just skim the surface.

Format and Structure Validation: JSON Validation confirms output formatting is correct. Regex and Pattern Checks plus Email and URL Validation make sure generated text follows whatever structural rules you have defined.

Safety and Compliance Checks: Built-in metrics cover toxicity, hate speech, sexist language, bias, and inappropriate content. On the privacy front, evaluations check compliance with GDPR, HIPAA, and the newer regulations that started rolling out in 2026.
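The scoring models behind these metrics are not documented here, but the basic idea behind groundedness, Chunk Attribution, and Chunk Utilization can be sketched in a few lines of Python. This is a deliberately naive stand-in that assumes plain word overlap approximates support: a response sentence counts as grounded when most of its content words appear in the retrieved chunks, a chunk counts as attributed when it shares at least two content words with the response, and utilization is the share of a chunk’s words the response reused. Every name, threshold, and the stop-word list are hypothetical; this is not Future AGI’s API or algorithm.

```python
import re

# Hypothetical, minimal stop-word list; real evaluators rely on trained models, not word lists.
STOP_WORDS = {"the", "a", "an", "is", "are", "was", "of", "to", "in", "and", "or", "for", "on", "it"}


def content_words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens with stop words removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def groundedness(response: str, chunks: list[str], threshold: float = 0.6) -> float:
    """Share of response sentences whose content words mostly appear in the retrieved chunks."""
    context = set().union(*(content_words(c) for c in chunks))
    sentences = split_sentences(response)
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = content_words(sentence)
        if words and len(words & context) / len(words) >= threshold:
            supported += 1
    return supported / len(sentences)


def chunk_attribution(response: str, chunks: list[str], min_shared: int = 2) -> list[bool]:
    """For each chunk, whether it shares at least `min_shared` content words with the response."""
    response_words = content_words(response)
    return [len(content_words(c) & response_words) >= min_shared for c in chunks]


def chunk_utilization(response: str, chunk: str) -> float:
    """Share of a chunk's content words that the response reused."""
    chunk_words = content_words(chunk)
    if not chunk_words:
        return 0.0
    return len(chunk_words & content_words(response)) / len(chunk_words)


chunks = [
    "Refunds for bereavement fares must be requested before travel.",
    "Baggage allowance is two checked bags on international routes.",
]
response = "Bereavement fare refunds must be requested before travel. You can also get a free upgrade."

print(groundedness(response, chunks))          # 0.5: the upgrade sentence has no support
print(chunk_attribution(response, chunks))     # [True, False]
print(chunk_utilization(response, chunks[0]))  # 0.875
```

Overlap is easy to fool: a faithful paraphrase scores low, and a false claim stitched together from context words can score high. That weakness is exactly why commercial platforms use trained evaluators or LLM judges, so treat this only as an illustration of what the scores mean.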

Custom Evaluation Frameworks

Agent as a Judge: This is one of the more interesting features. Multi-step AI agents with chain-of-thought reasoning and tool access evaluate model outputs. Think of it as having a thorough, tireless reviewer that can follow complex logic chains.

Deterministic Eval: Sometimes you need evaluation that produces the exact same result every single time, no wiggle room. That is what this mode delivers. Strict rule-based checking against predefined formats and criteria.
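Deterministic checks are the easiest part of evaluation to reproduce yourself. The sketch below uses only Python’s standard library to run the kind of rule-based checks described under Format and Structure Validation (JSON parsing, required keys, an email pattern, a URL check), so the same output always gets the same verdict. The function names, the required keys, and the loose email regex are assumptions for illustration, not Future AGI’s interface.

```python
import json
import re
from urllib.parse import urlparse

# Deliberately loose email pattern (assumption); production validators are stricter.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_valid_json(text: str) -> bool:
    """True if the text parses as JSON."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True


def is_valid_url(text: str) -> bool:
    """True for absolute http(s) URLs that include a host."""
    parsed = urlparse(text)
    return parsed.scheme in {"http", "https"} and bool(parsed.netloc)


def deterministic_eval(output: str, required_keys: list[str]) -> dict[str, bool]:
    """Rule-based checks only: identical input always produces identical verdicts."""
    results = {"is_json": is_valid_json(output)}
    data = json.loads(output) if results["is_json"] else {}
    if not isinstance(data, dict):
        data = {}  # a JSON array or scalar cannot carry the required keys
    results["has_required_keys"] = all(key in data for key in required_keys)
    results["email_valid"] = EMAIL_RE.fullmatch(str(data.get("email", ""))) is not None
    results["url_valid"] = is_valid_url(str(data.get("url", "")))
    return results


good = '{"email": "jane@example.com", "url": "https://example.com/docs"}'
bad = "Sure! Here is the JSON you asked for."
print(deterministic_eval(good, ["email", "url"]))  # every check True
print(deterministic_eval(bad, ["email", "url"]))   # every check False
```

Because nothing here calls a model, re-running the check on the same string can never change the result, which is what makes deterministic evals useful as regression gates.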

Advanced LLM Evaluation Capabilities

Multimodal Evaluations: Handles text, image, audio, and video inputs. As models get more versatile, this kind of coverage becomes less of a nice-to-have and more of a requirement.

Safety Evaluations: Harmful outputs get intercepted and filtered before they reach production. Proactive, not reactive.

AI Evaluating AI (No Ground Truth Required): This one saves teams a staggering amount of prep work. You can run LLM evaluations without assembling a curated dataset of “correct” answers first. For anyone who has spent weeks building ground truth datasets, that is a big deal.

Real-Time Guardrailing: The Protect feature enforces guardrails on live models while they are serving real users. You can tweak the criteria on the fly as new threats pop up or policies change, which means your compliance posture does not go stale.

Observability: Evaluations run against production outputs as they happen. Hallucinations, toxic content, quality drops. All flagged in real time, not discovered during a weekly review meeting.

Error Localizer: Rather than just telling you “this response has a problem,” it pinpoints the exact segment where the error lives. When you are debugging at scale, that precision is worth its weight in gold.

Reason Generation: Every evaluation score comes with a structured explanation of why the model got that score. No guesswork, no black boxes.
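Error localization is easiest to picture with one narrow failure type. The sketch below flags numbers in a response that never appear in the source document and returns the character offsets of each suspect span together with a plain-language reason, which mirrors the Error Localizer and Reason Generation ideas at a toy scale. Restricting the check to numbers, the regex used to find them, and the `Finding` structure are assumptions made to keep the example short; this is not how Future AGI implements either feature.

```python
import re
from dataclasses import dataclass

# Integers, decimals, comma-grouped numbers, and optional percent signs.
NUMBER_RE = re.compile(r"\d+(?:[.,]\d+)*%?")


@dataclass
class Finding:
    start: int   # character offset where the suspect span begins
    end: int     # character offset just past the suspect span
    text: str    # the flagged span itself
    reason: str  # human-readable explanation attached to the flag


def localize_unsupported_numbers(response: str, source: str) -> list[Finding]:
    """Flag every number in the response that does not appear verbatim in the source."""
    source_numbers = set(NUMBER_RE.findall(source))
    findings = []
    for match in NUMBER_RE.finditer(response):
        if match.group() not in source_numbers:
            findings.append(
                Finding(
                    start=match.start(),
                    end=match.end(),
                    text=match.group(),
                    reason=f"'{match.group()}' does not appear in the source document",
                )
            )
    return findings


source = "Q3 revenue was 4.2 million dollars, up 12% year over year."
response = "Revenue reached 4.2 million, a 15% increase."
for finding in localize_unsupported_numbers(response, source):
    print(finding.start, finding.end, finding.reason)  # 31 34 '15%' does not appear in the source document
```

Exact string matching means “1,000” and “1000” count as different numbers, so a real localizer would normalize values and cover non-numeric claims too.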

Deployment, Integration, and Usability

Installation: Setup goes through standard package managers. The documentation is step-by-step and genuinely helpful, not the kind where you still have to Google half the steps afterward.

User Interface: Clean design that works for people across the technical spectrum. Your senior ML engineer and your product lead can both find what they need without a tutorial every time.

Integration: Works with Vertex AI, LangChain, Mistral AI, and other major platforms in the ecosystem.

Performance and Scalability

High-Throughput Processing: Built for massive parallel processing. Enterprise-scale workloads will not choke it.

Configurable Processing Parameters: You get granular control over concurrency settings, so you can tune evaluation performance to match your infrastructure.
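Configurable concurrency usually comes down to capping how many evaluation calls are in flight at once. Here is a minimal asyncio sketch of that idea. `score_one` is a hypothetical placeholder for a real evaluator request, and `max_concurrency` stands in for whatever setting a given platform exposes; none of this is Future AGI’s SDK.

```python
import asyncio


async def score_one(sample: str) -> float:
    """Hypothetical evaluator call; the sleep simulates network latency."""
    await asyncio.sleep(0.01)
    return min(len(sample) / 100, 1.0)


async def evaluate_batch(samples: list[str], max_concurrency: int = 8) -> tuple[list[float], int]:
    """Score every sample with at most `max_concurrency` calls in flight; also report the observed peak."""
    semaphore = asyncio.Semaphore(max_concurrency)
    in_flight = 0
    peak = 0

    async def bounded(sample: str) -> float:
        nonlocal in_flight, peak
        async with semaphore:  # waits here once the cap is reached
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await score_one(sample)
            finally:
                in_flight -= 1

    # gather returns results in the same order as the input samples
    scores = await asyncio.gather(*(bounded(s) for s in samples))
    return list(scores), peak


scores, peak = asyncio.run(evaluate_batch(["x" * n for n in range(0, 200, 10)], max_concurrency=4))
print(len(scores), peak)  # peak never exceeds 4
```

Raising `max_concurrency` increases throughput until you run into the evaluator’s rate limits, which is why platforms expose it as a setting rather than hard-coding it.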

Customer Success and Community Engagement

Proven Performance: Early adopter teams have reported hitting up to 99% accuracy and cutting their iteration cycles by 10x. Those are the kind of numbers that get procurement’s attention. [4] [5]

Vendor Support: The support team actually knows the product and responds quickly. That sounds like a low bar, but anyone who has dealt with enterprise software support knows it is not.

Active Community: A dedicated Slack community where users swap tips, troubleshoot issues together, and share evaluation strategies.

Documentation and Tutorials: Guides, cookbooks, case studies, blog posts, and video tutorials. The onboarding library is substantial.

Educational Programs: Regular webinars and a podcast focused on LLM evaluation best practices. Good for keeping your team sharp.

User Reputation: People who use it tend to speak well of it. Reliable, practical, and easy to work with are the words that come up most.

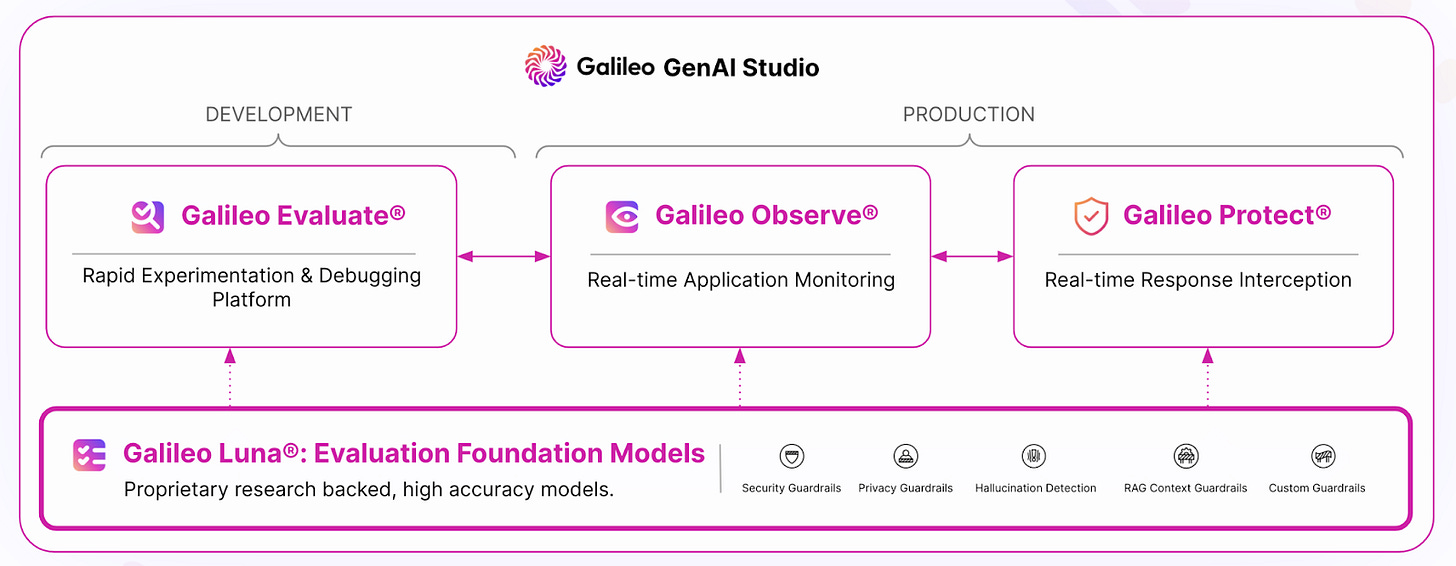

2. Galileo

Galileo Evaluate lives inside Galileo GenAI Studio as a dedicated LLM evaluation module. It is built for teams that want to systematically test LLM outputs for quality, accuracy, and safety before pushing anything into production.

Core LLM Evaluation Capabilities

Broad Assessment Range: Evaluations span factual correctness, content relevance, and how well the model sticks to safety protocols.

Custom LLM Evaluation Frameworks

Custom Metrics: Developers can define and register evaluation metrics that fit their specific use case. If the built-in metrics do not cover what you need, you can build your own.

Guardrails: You can configure guardrail metrics around toxicity and bias parameters, so those issues get caught automatically.
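Custom metrics and guardrails fit together naturally: a metric turns an output into a number, and a guardrail compares that number against a limit. The sketch below shows the pattern with a small registry and two toy metrics. The `register_metric` decorator, the keyword blocklist standing in for a real toxicity classifier, and the thresholds are all hypothetical and do not reflect Galileo’s actual SDK.

```python
from typing import Callable

MetricFn = Callable[[str], float]
METRICS: dict[str, MetricFn] = {}


def register_metric(name: str) -> Callable[[MetricFn], MetricFn]:
    """Decorator that stores a scoring function in the METRICS registry under `name`."""
    def decorator(fn: MetricFn) -> MetricFn:
        METRICS[name] = fn
        return fn
    return decorator


@register_metric("word_count")
def word_count(output: str) -> float:
    return float(len(output.split()))


# Hypothetical keyword list standing in for a trained toxicity classifier.
BLOCKLIST = {"idiot", "stupid"}


@register_metric("toxicity")
def toxicity(output: str) -> float:
    """Share of words that appear in the blocklist."""
    words = [w.strip(".,!?").lower() for w in output.split()]
    return sum(w in BLOCKLIST for w in words) / max(len(words), 1)


# Guardrail limits: an output passes when the metric stays at or below its limit.
GUARDRAILS = {"toxicity": 0.0, "word_count": 150.0}


def run_guardrails(output: str) -> dict[str, bool]:
    """Evaluate every guardrailed metric and report pass/fail per metric."""
    return {name: METRICS[name](output) <= limit for name, limit in GUARDRAILS.items()}


print(run_guardrails("Thanks for asking, here is the answer."))  # {'toxicity': True, 'word_count': True}
print(run_guardrails("That is a stupid question."))             # {'toxicity': False, 'word_count': True}
```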

Advanced LLM Evaluation Capabilities

Optimization Techniques: The platform offers guidance on fine-tuning prompt-based and RAG applications. Not just LLM evaluation, but pointers on how to actually improve.

Safety and Compliance: Continuous monitoring runs in the background, flagging potentially harmful or non-compliant content as it surfaces.
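Continuous monitoring is mostly about aggregation: individual outputs get flagged by evaluators, and the monitor decides when the flag rate is high enough to alert someone. This sketch keeps a rolling window of recent verdicts and raises an alert when the share of flagged outputs exceeds a threshold. The `RollingMonitor` class, window size, and alert rate are hypothetical choices, not Galileo’s implementation.

```python
from collections import deque


class RollingMonitor:
    """Tracks the share of flagged outputs among the most recent `window` evaluations."""

    def __init__(self, window: int = 100, alert_rate: float = 0.05):
        self.flags: deque = deque(maxlen=window)  # oldest verdicts fall off automatically
        self.alert_rate = alert_rate

    def record(self, flagged: bool) -> bool:
        """Add one verdict; return True when the rolling flag rate exceeds `alert_rate`."""
        self.flags.append(flagged)
        return sum(self.flags) / len(self.flags) > self.alert_rate


monitor = RollingMonitor(window=4, alert_rate=0.25)
verdicts = [False, False, True, False, False]
print([monitor.record(v) for v in verdicts])  # [False, False, True, False, False]
```

A rolling window forgets old incidents automatically, so a single bad burst raises an alert without leaving the dashboard red forever.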

Deployment, Integration, and Usability

Installation: Standard package managers, comprehensive quickstart guides. Getting up and running is not a multi-day affair.

User Interface: The dashboards and configuration tools are approachable enough that both technical and non-technical folks can use them without friction.

Performance and Scalability

Enterprise-Scale Processing: Designed for high-volume LLM evaluation workloads from the ground up.

Configurable Performance: Optimization options let you adjust for different throughput requirements depending on your situation.

Customer Impact and Community

Documented Improvements: Case studies show measurable gains in evaluation speed and overall efficiency.

Documentation: Implementation guidance is comprehensive and well-organized.

Vendor Support: The team is knowledgeable and tends to respond promptly.

Module-Specific Resources: Learning materials organized by functionality, so you are not hunting through a giant knowledge base for the one thing you need.

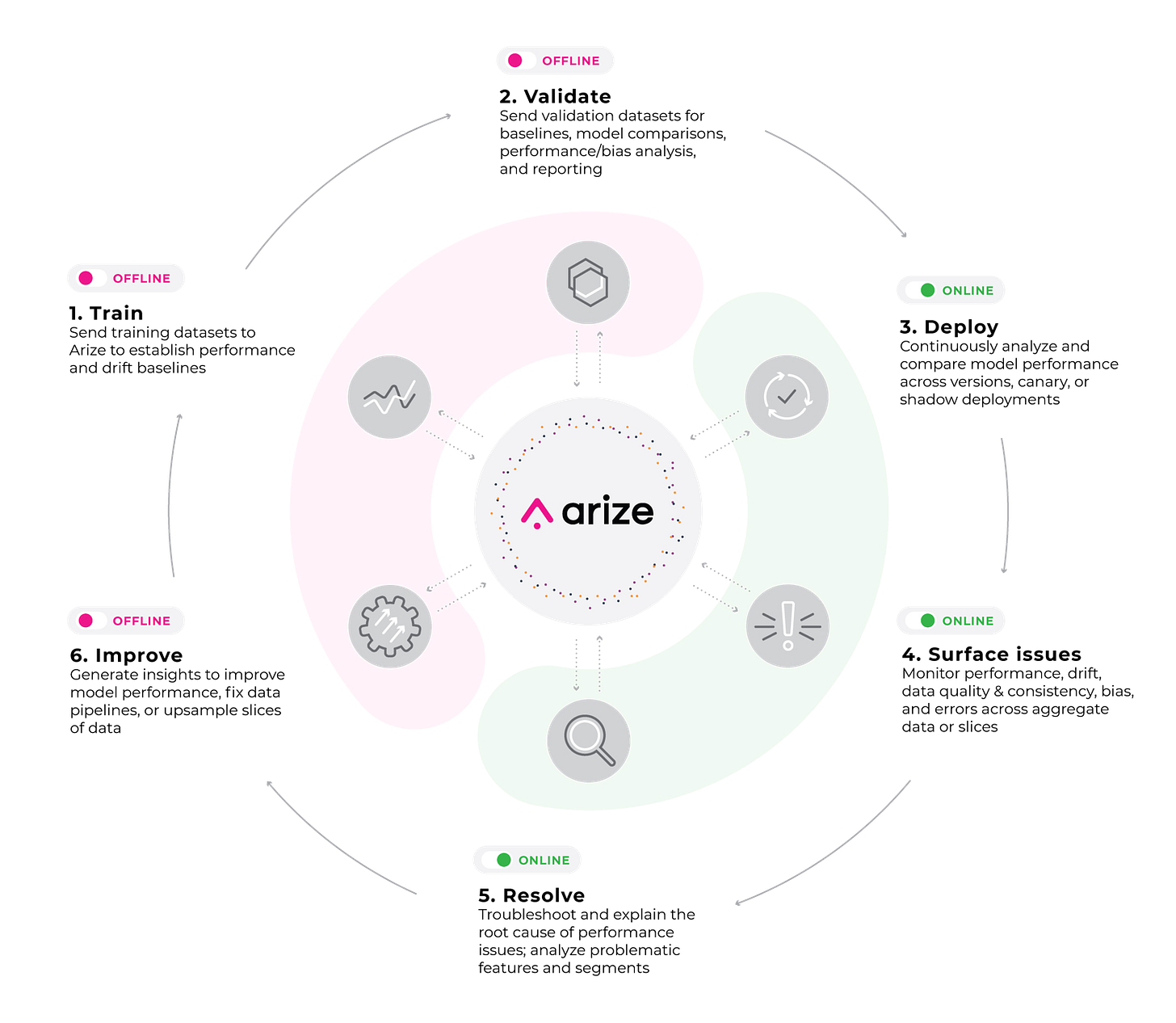

3. Arize

Arize carved out its niche as an enterprise observability and evaluation platform. Its specialty is continuous performance monitoring and model improvement, with particular strength in model tracing, drift detection, and bias analysis. The dynamic dashboards give you real-time, granular visibility that goes deeper than most competing platforms.

Core LLM Evaluation Capabilities

Specialized Evaluators: Ships with HallucinationEvaluator, QAEvaluator, and RelevanceEvaluator out of the box. Purpose-built, not generic.

RAG Evaluation Support: Dedicated features for evaluating retrieval-augmented generation systems. Not an afterthought bolted on later.

Custom LLM Evaluation Frameworks

LLM as a Judge: Supports the LLM-as-a-Judge approach, which opens up both fully automated and human-in-the-loop evaluation workflows.

Advanced LLM Evaluation Capabilities

Multimodal Support: Evaluates text, images, and audio data types.

Deployment, Integration, and Usability

Installation: A pip install and some configuration, with solid documentation to guide the rest.

Integration: Plays well with LangChain, LlamaIndex, Azure OpenAI, Vertex AI, and several other major platforms.

User Interface: Phoenix UI presents performance data clearly. It strikes a good balance between detail and readability.

Performance and Scalability

Asynchronous Logging: Non-blocking logging mechanisms keep overhead low and latency minimal. In production, that matters more than you might think.

Performance Optimization: Configurable timeouts and concurrency settings so you can balance evaluation speed against rate limits and reliability for your specific workload.
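Non-blocking logging generally means the request path only enqueues a record while a background worker does the slow export. The sketch below shows that pattern with Python’s `queue` and `threading` modules. The `AsyncLogger` class and its `sink` callable are hypothetical placeholders for a real exporter; this is a generic illustration, similar in spirit to the batching span processors in tracing libraries such as OpenTelemetry, not Arize’s code.

```python
import queue
import threading
from typing import Any, Callable


class AsyncLogger:
    """Hands records to a background thread so the caller never waits on slow I/O."""

    def __init__(self, sink: Callable[[dict[str, Any]], None]):
        self._queue: queue.Queue = queue.Queue()
        self._sink = sink  # hypothetical exporter, e.g. a function that sends records to a collector
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def log(self, record: dict[str, Any]) -> None:
        """Enqueue the record and return immediately (the queue is unbounded)."""
        self._queue.put_nowait(record)

    def _drain(self) -> None:
        """Worker loop: export records one at a time until the shutdown sentinel arrives."""
        while True:
            record = self._queue.get()
            if record is None:  # sentinel pushed by close()
                break
            try:
                self._sink(record)
            except Exception:
                pass  # a production logger would count or report dropped records here

    def close(self) -> None:
        """Let the worker finish everything already queued, then stop it."""
        self._queue.put(None)
        self._worker.join()


exported = []
logger = AsyncLogger(exported.append)
for i in range(3):
    logger.log({"span": i, "latency_ms": 12})
logger.close()
print(exported)  # the three records, in the order they were logged
```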

Customer Success and Community Engagement

End-to-End Support: AI engineers can manage the entire model lifecycle, from development through production, without leaving the platform.

Developer Enablement: Educational resources and technical webinars help users build deeper expertise over time.

Community Support: An active Slack community where people collaborate and troubleshoot in real time.

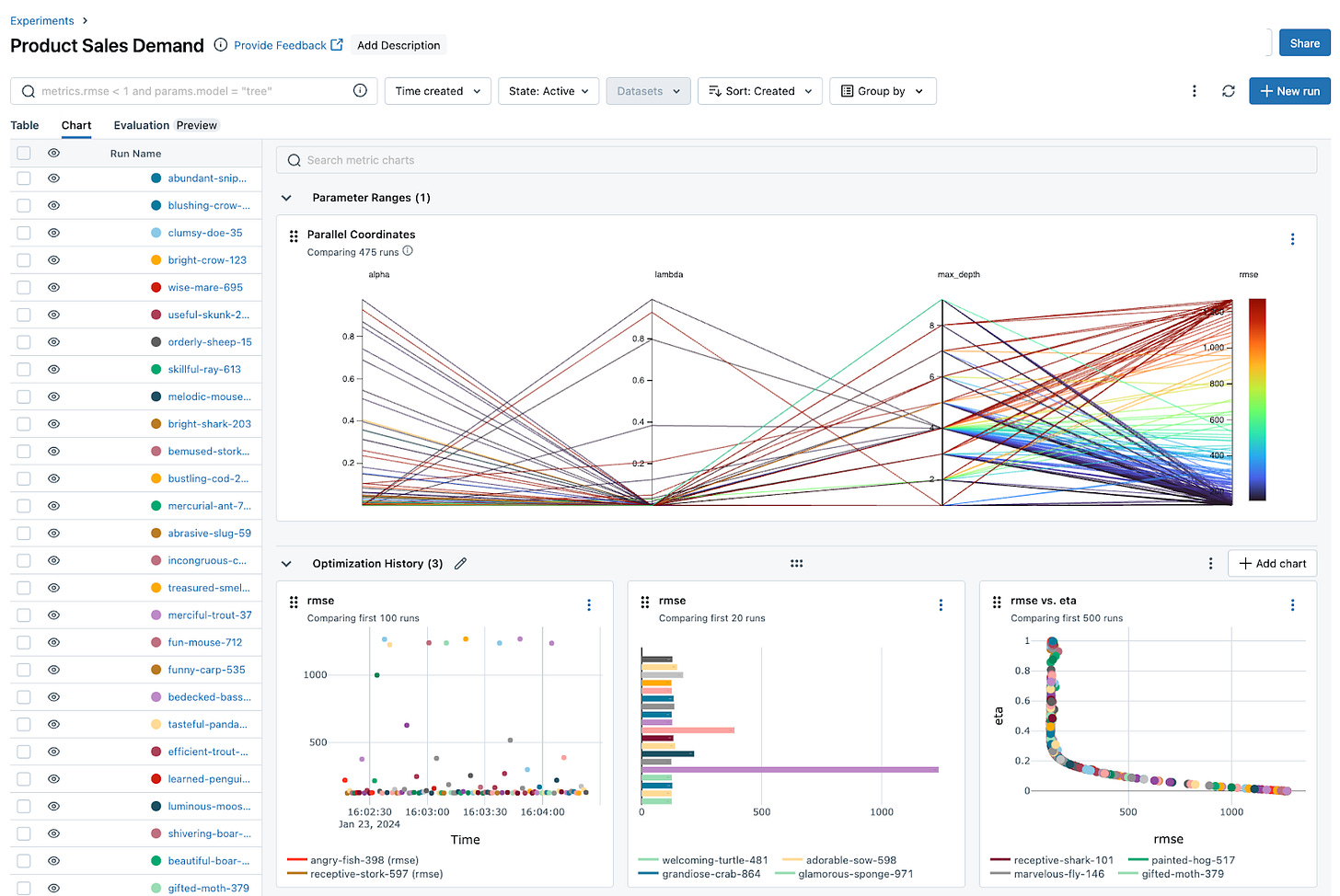

4. MLflow

MLflow has been a staple in the ML tooling world for years now. Originally focused on managing the traditional machine learning lifecycle, it has grown to include solid LLM and GenAI evaluation capabilities. The platform covers experiment tracking, evaluation, and observability in one place.

Core LLM Evaluation Capabilities

RAG Application Support: Built-in metrics for RAG system assessment come standard.

Multi-Metric Tracking: Detailed monitoring that works across both classical ML and GenAI workloads. If your team works in both worlds, that flexibility is a real advantage.

Custom LLM Evaluation Frameworks

LLM-as-a-Judge: You can set up qualitative evaluation workflows where LLMs judge the quality of other models’ outputs.

Advanced LLM Evaluation Capabilities

Multi-Domain Flexibility: Traditional ML, deep learning, generative AI. MLflow works across all of them, which is rare.

User Interface: The evaluation visualization UI is clean and does its job without getting in the way.

Deployment, Integration, and Usability

Managed Cloud Services: Available as a fully managed solution through Amazon SageMaker, Azure ML, and Databricks. No infrastructure headaches if you go that route.

Multiple API Options: Python, REST, R, and Java. Most teams will find their preferred language supported.

Documentation: Tutorials and API references are thorough and well-maintained.

Unified Endpoint: The MLflow AI Gateway gives you one interface to access multiple LLM and ML providers. If you are juggling several providers, this alone can simplify your architecture.

Customer Impact and Community

Cross-Domain Support: Working across traditional ML and generative AI in one platform is a genuine differentiator for teams with diverse workloads.

Open Source Community: Part of the Linux Foundation, with over 14 million monthly downloads. That kind of community size means answers to your questions usually exist somewhere already.

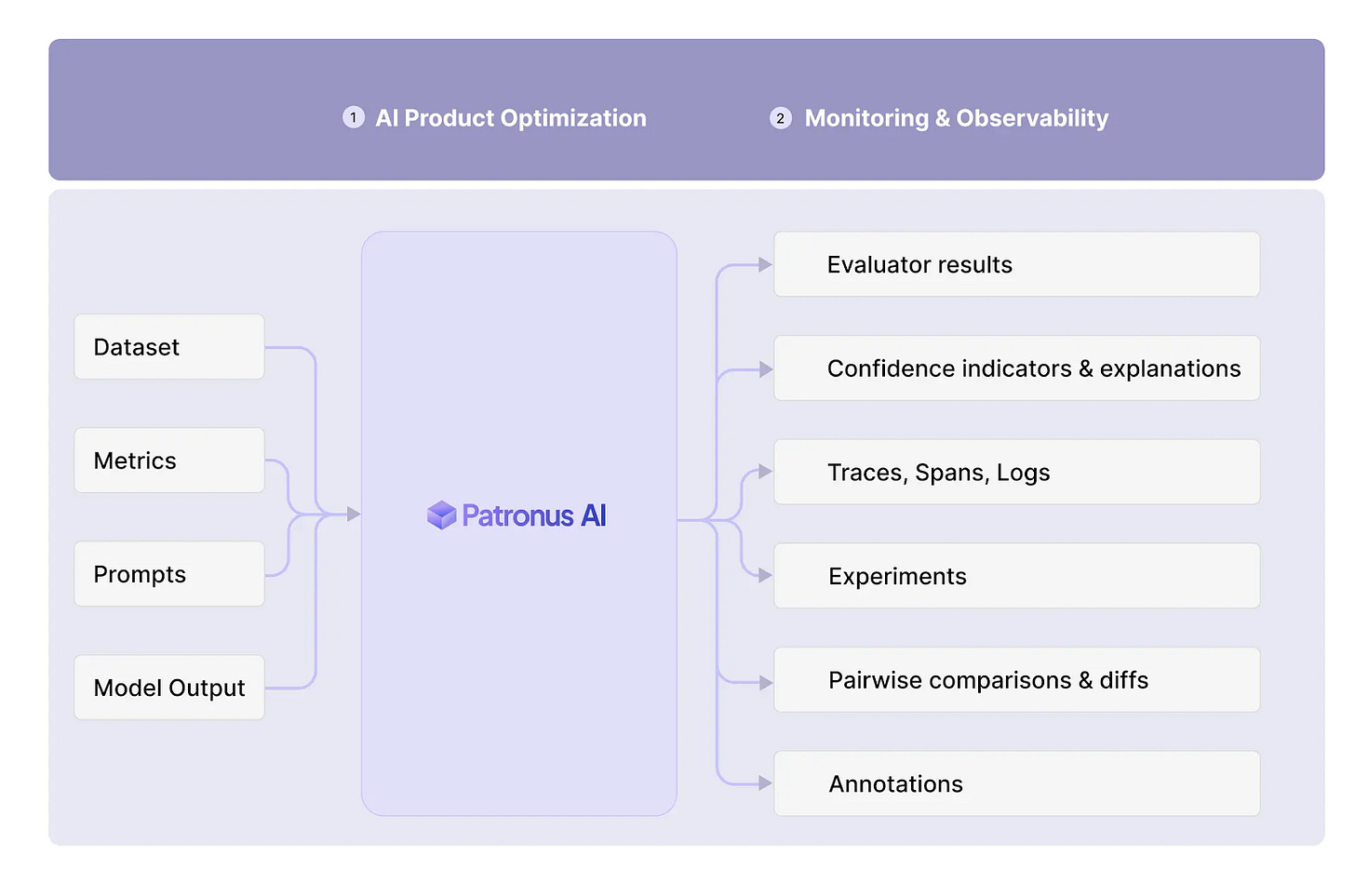

5. Patronus AI

Patronus AI was designed from the start to help teams evaluate and improve their GenAI applications in a structured, repeatable way. It brings a wide toolkit of LLM evaluation capabilities together in one place.

Core LLM Evaluation Capabilities

Hallucination Detection: A fine-tuned evaluator checks whether generated content is actually supported by the input or retrieved context. This is probably the platform’s strongest individual feature.

Rubric-Based Scoring: Likert-style scoring against custom rubrics for things like tone, clarity, relevance, and task completeness. Consistent, repeatable, and customizable.

Safety and Compliance: Built-in evaluators scan for harmful or biased content. Gender bias, age bias, racial bias, and more. All covered out of the box.

Format Validation: Evaluators like is-json, is-code, and is-csv confirm that outputs follow the structural formats they are supposed to.

Conversational Quality: For chatbot use cases, evaluators assess conversation-level behaviors: is the response concise? Polite? Actually helpful?
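Rubric-based Likert scores are easier to compare across criteria once the 1 to 5 values are normalized. The sketch below checks that every rubric criterion received a valid score, maps each score onto a 0 to 1 scale, averages them, and applies a pass threshold. The rubric text, the equal weighting, and the 0.75 threshold are assumptions for illustration, not Patronus defaults.

```python
from statistics import mean

# Hypothetical rubric: criterion -> anchor descriptions shown to the grader (human or LLM).
RUBRIC = {
    "tone": "1 = hostile or dismissive, 5 = warm and professional",
    "clarity": "1 = confusing, 5 = immediately understandable",
    "completeness": "1 = ignores the task, 5 = answers every part",
}


def aggregate_likert(scores: dict[str, int], pass_threshold: float = 0.75) -> tuple[float, bool]:
    """Validate 1-5 scores for every rubric criterion, normalize to 0-1, average, and apply a threshold."""
    missing = RUBRIC.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    for criterion in RUBRIC:
        if not 1 <= scores[criterion] <= 5:
            raise ValueError(f"{criterion} score {scores[criterion]} is outside 1-5")
    # (score - 1) / 4 maps 1 -> 0.0 and 5 -> 1.0; criteria are weighted equally (assumption).
    normalized = mean((scores[c] - 1) / 4 for c in RUBRIC)
    return normalized, normalized >= pass_threshold


print(aggregate_likert({"tone": 5, "clarity": 5, "completeness": 5}))  # (1.0, True)
print(aggregate_likert({"tone": 4, "clarity": 2, "completeness": 1}))  # below the threshold, so it fails
```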

Custom LLM Evaluation Frameworks

Function-Based Evaluators: Good for straightforward heuristic checks. Schema validation, regex matching, length verification. Simple but effective.

Class-Based Evaluators: When you need more horsepower. Embedding similarity measurements, custom LLM judges, that sort of thing.

LLM Judges: Create your own LLM-powered judges by writing prompts and scoring rubrics. Models like GPT-4o-mini can power these evaluations.
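A custom LLM judge is mostly a prompt template plus a strict parser for the judge’s reply. This sketch formats a rubric-based prompt, sends it through a `call_model` function you supply, and extracts a 1 to 5 score and a short reason. `call_model` is a hypothetical hook for whatever chat API you use (GPT-4o-mini, for example), and the template and `SCORE:` reply format are assumptions; this is not the Patronus SDK.

```python
import re
from typing import Callable

JUDGE_TEMPLATE = """You are grading an AI assistant's answer.
Rubric: {rubric}
Question: {question}
Answer: {answer}
Reply with a line of the form "SCORE: <1-5>" followed by a one-sentence reason."""

# A single digit 1-5 that is not part of a longer number.
SCORE_RE = re.compile(r"SCORE:\s*([1-5])\b")


def llm_judge(question: str, answer: str, rubric: str, call_model: Callable[[str], str]) -> tuple[int, str]:
    """Format the judge prompt, call the model, and parse a 1-5 score plus its reason."""
    prompt = JUDGE_TEMPLATE.format(rubric=rubric, question=question, answer=answer)
    reply = call_model(prompt)
    match = SCORE_RE.search(reply)
    if match is None:
        raise ValueError(f"judge reply had no parseable score: {reply!r}")
    reason = reply[match.end():].strip() or "(no reason given)"
    return int(match.group(1)), reason


def fake_model(prompt: str) -> str:
    """Offline stand-in for a real chat API call so the example runs without credentials."""
    return "SCORE: 4\nAccurate, but the second paragraph repeats the first."


print(llm_judge("When do refunds apply?", "Before travel.", "5 = correct and complete", fake_model))
```

Failing loudly on an unparseable reply is a deliberate choice: silently defaulting to a middle score would hide judge errors inside your averages.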

Advanced LLM Evaluation Capabilities

Multimodal Support: Evaluates text and image inputs.

RAG Metrics: Specialized metrics that separate retrieval performance from generation performance. If something is wrong with your RAG pipeline, these metrics help you figure out which half is the problem.

Real-Time Monitoring: Production monitoring through tracing, logging, and alerting. You get visibility into what your models are doing while they are doing it.
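Separating retrieval from generation usually requires labeled relevant documents per query. With those labels, a failed answer can be attributed to whichever half of the pipeline broke. The sketch below computes recall@k and reciprocal rank for the retriever and uses them to route failures. The `RagSample` structure, the `answer_correct` verdict coming from some separate generation check, and the rule that retrieval only counts as successful when every relevant document lands in the top k are assumptions for illustration, not Patronus’s metric definitions.

```python
from dataclasses import dataclass


@dataclass
class RagSample:
    retrieved_ids: list[str]  # ranked document IDs returned by the retriever
    relevant_ids: set[str]    # IDs a labeler marked as relevant for this query
    answer_correct: bool      # verdict from a separate generation check (hypothetical)


def recall_at_k(sample: RagSample, k: int) -> float:
    """Fraction of relevant documents that appear in the top k results."""
    if not sample.relevant_ids:
        return 0.0
    hits = set(sample.retrieved_ids[:k]) & sample.relevant_ids
    return len(hits) / len(sample.relevant_ids)


def reciprocal_rank(sample: RagSample) -> float:
    """1 / rank of the first relevant document, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(sample.retrieved_ids, start=1):
        if doc_id in sample.relevant_ids:
            return 1 / rank
    return 0.0


def diagnose(sample: RagSample, k: int = 3) -> str:
    """Blame retrieval if relevant docs were missing from the top k, otherwise blame generation."""
    if sample.answer_correct:
        return "ok"
    return "generation" if recall_at_k(sample, k) == 1.0 else "retrieval"


samples = [
    RagSample(["d3", "d1", "d7"], {"d1"}, answer_correct=False),
    RagSample(["d4", "d5", "d6"], {"d1"}, answer_correct=False),
    RagSample(["d1", "d2", "d9"], {"d1"}, answer_correct=True),
]
print([diagnose(s) for s in samples])  # ['generation', 'retrieval', 'ok']
```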

Deployment, Integration, and Usability

Installation: SDKs in Python and TypeScript.

User Interface: Clean, uncluttered design. Navigation is straightforward.

Broad Compatibility: Integrates with IBM Watson, MongoDB Atlas, and other tools across the AI stack.

Performance and Scalability

High-Throughput Evaluation: Efficient processing through concurrent API calls and batch processing.

Configurable Processing: Granular control over evaluation behavior so you can scale as your needs grow.

Customer Success and Community Engagement

Vendor Support: Direct support available for clients.

Community Engagement: A MongoDB partnership provides dedicated resources for teams evaluating MongoDB Atlas-based retrieval systems.

Documentation and Tutorials: Quick-start guides and tutorials get new users oriented without too much friction.

User Feedback: Clients report meaningfully better precision in hallucination detection after integrating the platform.

Key Takeaways

Future AGI: The widest multimodal evaluation coverage of any platform reviewed. Text, image, audio, and video, all with fully automated assessment that does not need human reviewers or ground truth datasets. The dedicated deterministic evaluation mode adds a consistency layer that production environments genuinely need.

Galileo: A modular platform with built-in guardrails, real-time safety monitoring, and custom metrics. Finds its sweet spot with RAG and agentic workflows.

Arize AI: Enterprise-grade with specialized evaluators for hallucinations, QA, and relevance. The combination of LLM-as-a-Judge, multimodal handling, and RAG evaluation makes it particularly appealing to teams that live and breathe observability.

MLflow: The open-source workhorse. Unified evaluation across traditional ML and GenAI, deep cloud platform integration, and a massive community. If your team already lives in the MLflow ecosystem, the GenAI evaluation capabilities feel like a natural extension.

Patronus AI: Solid across the board. Hallucination detection, custom rubric scoring, safety checks, and structured format validation. The reported 91% agreement rate with human judgment is a number worth paying attention to.

Conclusion

None of these tools is objectively “the best” in every dimension. They each bring something different to the table, and the right pick depends heavily on what your team actually needs.

MLflow gives you flexibility and open-source freedom with evaluation that spans both ML and GenAI, plus deep integration with the major cloud providers. Arize AI and Patronus AI both deliver enterprise-ready platforms with strong specialized evaluators, scalable infrastructure, and wide ecosystem compatibility. Galileo zeroes in on real-time guardrails and custom metrics, with a particular focus on RAG and agentic workflows.

Future AGI pulls the broadest set of capabilities into one platform. Fully automated multimodal evaluations, continuous optimization, deterministic evaluation, and a low-code approach that keeps things accessible. The performance numbers from early adopters are hard to ignore: up to 99% accuracy and 10x faster iteration cycles. For organizations trying to build AI systems that are both high-performing and trustworthy at scale, that combination of speed and reliability makes a compelling case.

Future AGI can help your organization build high-performing, trustworthy AI systems at scale. Get in touch with us to explore the possibilities.

FAQs: LLM Evaluation Tools

1. What are LLM evaluation tools and why do I need them?

LLM evaluation tools are software platforms that systematically test and monitor AI language models for accuracy, safety, and performance, helping you catch problems like hallucinations and bias before they reach your customers and cause costly reputation damage.

2. How much does it cost if I skip proper LLM evaluation?

The costs can be severe. Air Canada was held legally liable for its chatbot’s misinformation, and Apple had to suspend its AI news summaries after they generated fake headlines. Cases like these show that inadequate evaluation can lead to financial losses, regulatory issues, and lasting brand damage.

3. What metrics should I look for when choosing an LLM evaluation platform?

Look for comprehensive coverage including hallucination detection, bias measurements, groundedness scores, factual verification, and real-time monitoring capabilities that work across different task types, not just basic accuracy checks.

4. Can I evaluate LLMs without creating ground truth datasets?

Yes, modern platforms like Future AGI now offer AI-evaluating-AI capabilities that run assessments without requiring you to spend weeks building curated datasets of correct answers, which saves massive amounts of preparation time.

5. Which LLM evaluation tool is best for enterprise RAG applications?

Future AGI offers the most complete solution with specialized RAG metrics like Chunk Utilization and Context Relevance, plus multimodal support and deterministic evaluation, though Galileo and Arize also provide strong RAG-focused features.

6. Do evaluation tools work with my existing AI infrastructure?

Most leading platforms integrate with popular frameworks like LangChain, Vertex AI, Azure OpenAI, and LlamaIndex, with Python SDKs and REST APIs that slot into whatever pipeline your team already runs.

References

[1] https://www.theverge.com/2023/1/25/23571082/cnet-ai-written-stories-errors-corrections-red-ventures

[2] https://www.bbc.com/news/articles/cq5ggew08eyo

[3] https://www.forbes.com/sites/marisagarcia/2024/02/19/what-air-canada-lost-in-remarkable-lying-ai-chatbot-case/

[4] https://futureagi.com/customers/scaling-success-in-edtech-leveraging-genai-and-future-agi-for-better-kpi

[5] https://futureagi.com/customers/elevating-sql-accuracy-how-future-agi-streamlined-retail-analytics