MCP vs A2A - The Future of Autonomous Agents

Understand the key differences between MCP and A2A protocols in 2025, and learn when to use each for agentic AI systems, tool access, and collaboration.

Introduction

In 2025, autonomous AI agents can communicate with one another through A2A and connect to various data sources and tools using MCP.

Complex multi-agent ecosystems built on autonomous AI agents are replacing isolated, standalone processes.

These agents now manage operations in real time, exchanging context and status to tackle problems larger than any single agent could handle. Companies in fields ranging from operations to marketing are deploying teams of agents to automate entire processes without constant human supervision.

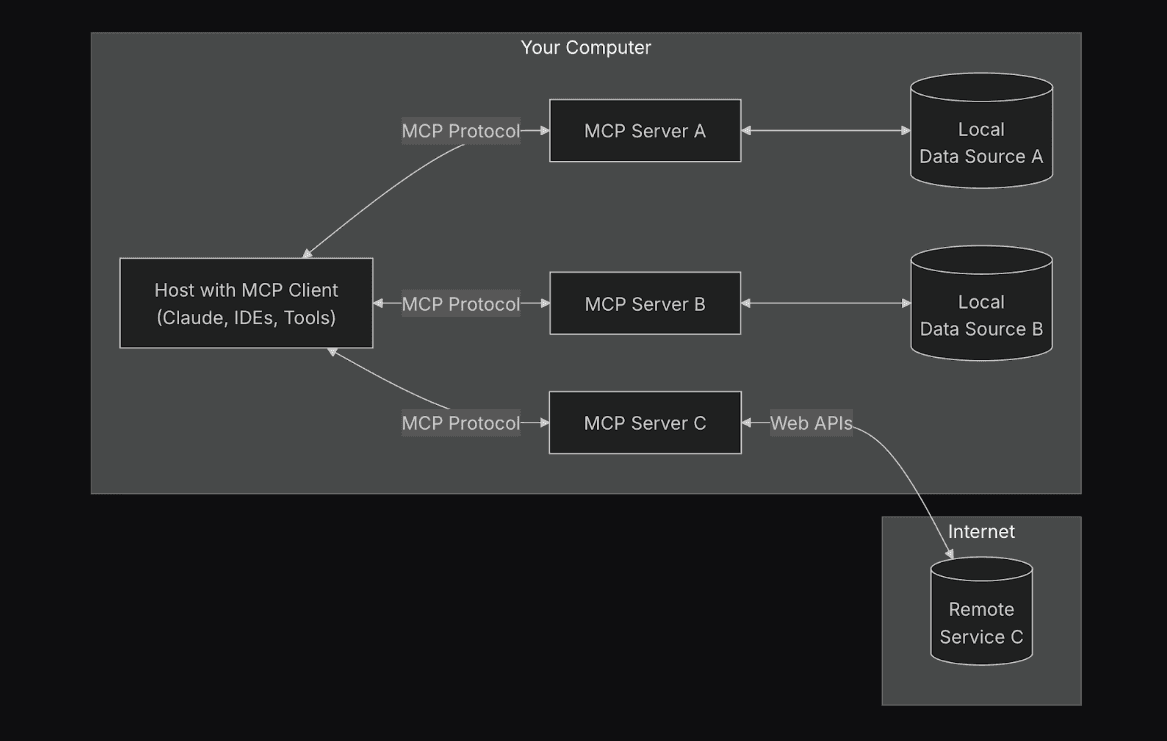

Model Context Protocol provides a standardized way to interface with databases, APIs, and applications without writing custom integration code for each one.

Agent2Agent enables communication, context sharing, and collaboration among agents across many platforms.

MCP - Developed by Anthropic

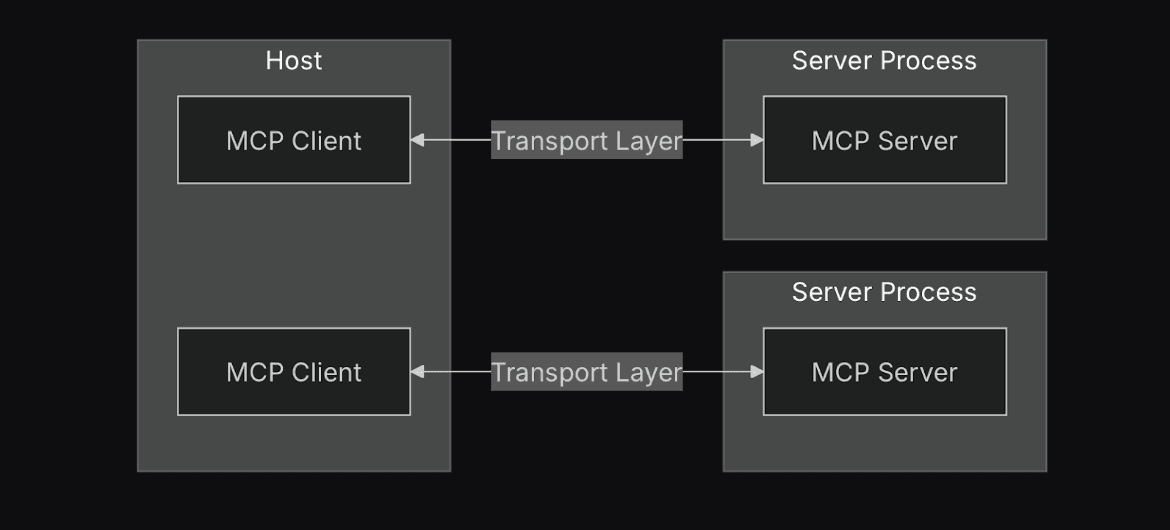

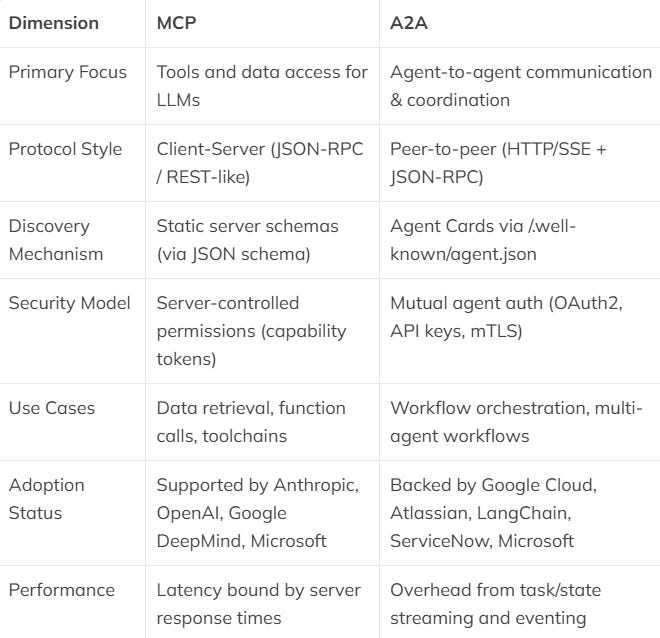

Model Context Protocol (MCP) is a client-server protocol designed by Anthropic to expose structured data and tools to LLMs through a standardized JSON-RPC interface. It gives agents a consistent way to discover and call methods, which reduces the need for bespoke integration code. MCP uses JSON-RPC 2.0 messages over HTTP (or other transports, such as standard input/output) to carry method calls, arguments, and results between LLM-powered clients and external servers.

2.1 Core Components

MCP Server: Hosts endpoints that provide access to data sources, APIs, or toolchains; manages authentication and rate-limiting to ensure secure and regulated access to functionalities.

MCP Client: An application or agent driven by a large language model. It reads the server's JSON schema to learn which methods are supported, then calls tool endpoints to enrich the model's responses.

2.2 Communication Flow

Schema Discovery: Before making any calls, the client inspects the server's JSON schema (e.g., schema.ts) to find the available methods, their arguments, and their return types.

Request Construction: The client builds a JSON-RPC request that specifies the intended method and its parameters, along with any contextual metadata, to request a completion or generation.

Execution and Response: The server executes the approved actions (e.g., SQL queries, file reads) and returns the results, structured or unstructured, which the client then passes to the LLM for further processing.
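The three steps above can be sketched in plain Python without any MCP SDK. This is a minimal illustration, not the official client: it builds JSON-RPC 2.0 messages for `tools/list` and `tools/call` (the method names MCP uses for tool discovery and invocation) and routes them to a toy in-process server. The `ToyServer` class, the `read_file` tool, and its fake file contents are hypothetical stand-ins; a real client would send the same kind of messages over an actual transport.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request ids should be unique per session


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


class ToyServer:
    """Hypothetical in-process stand-in for an MCP server."""

    # Tool metadata the server advertises during discovery.
    TOOLS = [{
        "name": "read_file",
        "description": "Return the contents of a (fake) file",
        "inputSchema": {
            "type": "object",
            "properties": {"path": {"type": "string"}},
            "required": ["path"],
        },
    }]
    FILES = {"notes.txt": "hello from the toy server"}

    def handle(self, raw):
        request = json.loads(raw)
        method, params = request["method"], request.get("params", {})
        if method == "tools/list":
            result = {"tools": self.TOOLS}
        elif method == "tools/call" and params.get("name") == "read_file":
            text = self.FILES.get(params["arguments"]["path"], "")
            result = {"content": [{"type": "text", "text": text}]}
        else:
            # -32601 is the standard JSON-RPC "method not found" code.
            error = {"code": -32601, "message": "Method not found"}
            return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


def call(server, method, params=None):
    """Serialize a request, hand it to the server, and decode the reply."""
    return json.loads(server.handle(json.dumps(make_request(method, params))))


server = ToyServer()
# 1. Schema discovery: ask which tools exist and what arguments they take.
tools = call(server, "tools/list")["result"]["tools"]
# 2. Request construction: name the tool and supply its arguments.
reply = call(server, "tools/call",
             {"name": "read_file", "arguments": {"path": "notes.txt"}})
# 3. Execution and response: this text is what the client hands to the LLM.
tool_output = reply["result"]["content"][0]["text"]
```

Keeping the messages as plain dictionaries makes the protocol shape visible; in practice, an SDK would hide this plumbing behind higher-level calls.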

2.3 Key Features and Extensions

Model-Agnostic: Because it works at the protocol level rather than through model-specific SDKs, MCP works with any LLM API, including OpenAI, Anthropic Claude, Google Gemini, and more.

Secure Two-Way Links: Servers control permissions and rate limits without exposing API keys to clients. Instead, they authorize every method call with capability tokens.

SDK Support: Official SDKs for Python, TypeScript, C#, Java, and other languages include high-level abstractions for schema loading, request signing, and transport configuration.

A2A - Developed by Google

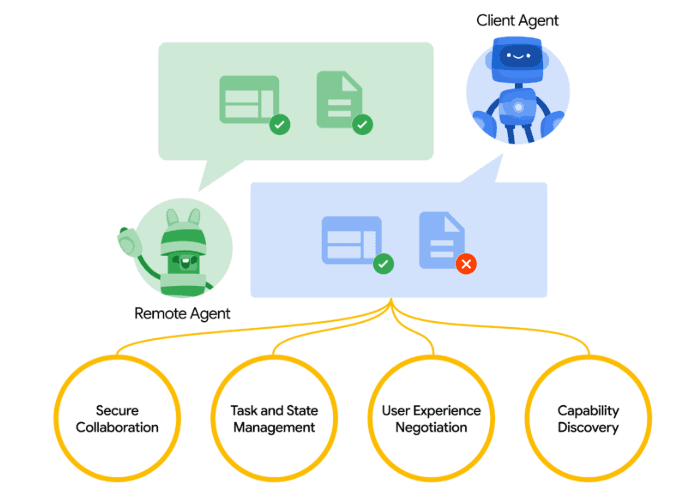

Agent2Agent (A2A) is a peer-to-peer protocol developed by Google that enables discovery, secure communication, and task management across diverse AI agents. Any agent can act as a client or a server, which enables cross-platform communication.

3.1 Core Primitives

Agent Card: A publicly accessible JSON file (e.g., /.well-known/agent.json) that specifies an agent's name, skills, endpoints, and authentication prerequisites, enabling peers to understand the activities it can do.

Tasks & Messages: A2A defines tasks/send for single tasks and tasks/sendSubscribe for long-running workflows with progress events, along with generic message and artifact structures that enable data exchange and coordination of task status.
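To make the Agent Card idea concrete, here is a simplified sketch of one as a Python dictionary, serialized to the JSON a peer would fetch. The fields mirror the ones named above (name, skills, endpoint, authentication), but every value, the `agents.example.com` URL, and the `find_skill` helper are made up for illustration; the authoritative field layout is whatever the A2A specification defines.

```python
import json

# Illustrative Agent Card; every value here is invented for the example.
agent_card = {
    "name": "Invoice Helper",
    "description": "Extracts totals and due dates from invoices",
    "url": "https://agents.example.com/a2a",  # endpoint that accepts JSON-RPC calls
    "version": "1.0.0",
    "capabilities": {"streaming": True},       # signals support for SSE updates
    "authentication": {"schemes": ["bearer"]},
    "skills": [
        {"id": "extract_total", "name": "Extract invoice total",
         "description": "Returns the total amount of an invoice"},
        {"id": "extract_due_date", "name": "Extract due date",
         "description": "Returns the payment due date"},
    ],
}


def find_skill(card, skill_id):
    """Return the skill entry with the given id, or None if the agent lacks it."""
    return next((s for s in card["skills"] if s["id"] == skill_id), None)


# This is the kind of document served from /.well-known/agent.json.
card_json = json.dumps(agent_card, indent=2)
```

A client agent could call `find_skill(agent_card, "extract_total")` before delegating work, and fall back to another peer when the result is `None`.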

3.2 Communication Patterns

Discovery: Agents retrieve peer Agent Cards with an HTTP GET request to https://<agent-domain>/.well-known/agent.json, following the RFC 8615 convention for "well-known" URIs.

Task Negotiation: A client agent sends a tasks/send JSON-RPC call to a peer to start a task. To follow progress, it uses tasks/sendSubscribe instead and receives status updates over SSE.

Artifact Exchange: When an agent's work is completed or paused, it produces artifacts: structured outputs such as JSON payloads or file URIs. Peers then retrieve these artifacts to continue the workflow or present the results.
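The discovery, task, and artifact steps above can be sketched as three small helpers. This is an offline illustration that only builds and inspects messages; it makes no network calls. The message and artifact shapes (`role`, `parts`, `artifacts`) are simplified assumptions, and `agents.example.com`, `task-1`, and the sample result are hypothetical.

```python
import json
import uuid
from urllib.parse import urlunsplit


def agent_card_url(domain):
    """Well-known location of an agent's card (RFC 8615 style path)."""
    return urlunsplit(("https", domain, "/.well-known/agent.json", "", ""))


def build_task_request(text, streaming=False, task_id=None):
    """Build a JSON-RPC 2.0 request that sends one text message as a task."""
    method = "tasks/sendSubscribe" if streaming else "tasks/send"
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),
        "method": method,
        "params": {
            "id": task_id or str(uuid.uuid4()),  # task identifier
            "message": {
                "role": "user",
                "parts": [{"type": "text", "text": text}],
            },
        },
    }


def collect_artifact_text(task_result):
    """Gather the text parts from the artifacts of a finished task."""
    return [
        part["text"]
        for artifact in task_result.get("artifacts", [])
        for part in artifact.get("parts", [])
        if part.get("type") == "text"
    ]


url = agent_card_url("agents.example.com")
request = build_task_request("Summarize Q3 incidents", streaming=True, task_id="task-1")
payload = json.dumps(request)  # body a client would POST to the peer's endpoint

# Hypothetical result a peer might return once the task has completed.
sample_result = {
    "id": "task-1",
    "status": {"state": "completed"},
    "artifacts": [{"parts": [{"type": "text", "text": "3 incidents, all resolved"}]}],
}
summary = collect_artifact_text(sample_result)
```

Choosing the method name from a `streaming` flag keeps the one-shot and subscribed variants on a single code path, since their parameters are the same in this sketch.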

3.3 Core Design Principles

Framework-Independent: A2A is built on HTTP(S), JSON-RPC 2.0, and SSE, so it can be used with any technology stack without lock-in to a single provider.

Capability Discovery: Agents use their Agent Cards to advertise their available skills and supported interaction modes, which enables dynamic matching between task requirements and capable peers.

Security & Auth: The protocol supports OAuth2, API keys, and mutual TLS (mTLS) for two-way authentication, along with scoped tokens that restrict which methods each agent may call.
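The idea of method-scoped tokens can be illustrated with a small authorization check. This is a conceptual sketch only: `ScopedToken`, `authorize`, and `handle_call` are hypothetical names, the token is a plain in-memory object rather than a real OAuth2 credential, and the error code is picked arbitrarily from the range JSON-RPC reserves for implementation-defined server errors.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ScopedToken:
    """Hypothetical token that lists the methods its holder may call."""
    subject: str
    allowed_methods: frozenset = field(default_factory=frozenset)


def authorize(token, method):
    """Return True only if the token's scope covers the requested method."""
    return method in token.allowed_methods


def handle_call(token, method):
    """Reject out-of-scope calls with a JSON-RPC style error object."""
    if not authorize(token, method):
        # -32001 is an arbitrary choice from the server-error range.
        return {"error": {"code": -32001, "message": f"{method} not permitted"}}
    return {"result": f"{method} accepted for {token.subject}"}


# This agent may start one-shot tasks but may not open streaming subscriptions.
reporter = ScopedToken("reporting-agent", frozenset({"tasks/send"}))
allowed = handle_call(reporter, "tasks/send")
denied = handle_call(reporter, "tasks/sendSubscribe")
```

In a real deployment the scope would be carried inside a verified credential, and the check would run before any task logic executes.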

Side-by-Side Comparison Matrix

MCP vs A2A: When to Use What

Use MCP When

Requires Tight Control and Audit Trails: MCP is ideal in environments where traceability, control, and monitoring are critical. Because every action an AI agent takes goes through an MCP server, it can be logged, which makes decisions easy to trace and review. MCP is therefore well suited to industries like banking or healthcare, where accountability is non-negotiable.

Needs Dynamic Tool Selection or Compliance Checks: MCP excels in scenarios that require real-time tool selection or the enforcement of compliance protocols. For example, on a legal technology platform, an agent can select the appropriate compliance checker or contract template based on local regulations. MCP lets agents dynamically call the right tool for the task, supporting legal and business compliance.

Needs Single-Agent Decision-Making with Strong Memory: MCP's context management shines if a job calls for one agent to oversee a multi-step process while remembering context—like a customer support case spanning several interactions. It records past details (preferences, past problems, resolutions) so the agent maintains continuity and never loses important information.

Use A2A When

Involves Multiple Agents from Different Vendors or Platforms: A2A excels when you need specialized agents from different vendors to interact naturally. A2A lets them share data and coordinate across platforms; in enterprise IT, one agent might handle helpdesk tickets, another tracks incidents, and a third monitors network health.

Requires Coordination Between Specialized Agents: A2A uses structured messages and task orchestration so that agents know their roles and timing when working on distinct parts of a task, such as inventory tracking, shipping logistics, and demand forecasting in supply chain management. These agents exchange messages and artifacts to keep their operations running without interruption.

Involves Long or Multi-Step Tasks: For processes spanning hours or days, A2A supports long-running tasks with real-time updates via Server-Sent Events and artifact exchange for handoffs. One example is a product development pipeline where market research, prototyping, and testing are handled by different agents. This ongoing communication ensures that every agent picks up exactly where the last one left off, preserving visibility into progress throughout the task's lifetime.
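For the long-running case, a client has to read status updates from a Server-Sent Events stream. The sketch below is a minimal SSE reader that handles only `data:` fields and blank-line event boundaries, which is enough to show the idea. The `parse_sse` helper is hypothetical, and the event payloads in `stream` are invented examples rather than the exact A2A event schema.

```python
import json


def parse_sse(lines):
    """Yield the decoded JSON payload of each SSE event in an iterable of lines."""
    data = []
    for line in lines:
        line = line.rstrip("\n")
        if line.startswith("data:"):
            value = line[5:]
            # SSE allows one optional space after the colon.
            if value.startswith(" "):
                value = value[1:]
            # A single event may spread its payload over several data: lines.
            data.append(value)
        elif line == "" and data:
            # A blank line terminates the event.
            yield json.loads("\n".join(data))
            data = []
        # Other fields (event:, id:, retry:) and comment lines are ignored here.


# Hypothetical stream of task status updates, as raw lines from an HTTP response.
stream = [
    'data: {"id": "task-1", "status": {"state": "working"}}\n',
    "\n",
    ": keep-alive comment\n",
    'data: {"id": "task-1", "status": {"state": "completed"}, "final": true}\n',
    "\n",
]
states = [event["status"]["state"] for event in parse_sse(stream)]
```

Because `parse_sse` is a generator, a client can react to each update as it arrives instead of waiting for the whole task to finish.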

Popular MCP Servers

These pre-built MCP servers can help you test and launch quickly if you need to:

DataWorks: Provides dataset exploration and cloud resource management via MCP by letting AI access the DataWorks Open API.

Kubernetes with OpenShift: MCP Server interfaces with OpenShift clusters and provides CRUD capabilities for Kubernetes resources.

Langflow-DOC-QA-SERVER: Uses a Langflow backend and core MCP capabilities to enable document-centric question answering.

Lightdash MCP Server: Lets agents access analytical insights straight from MCP by querying BI dashboards.

Linear MCP Server: Integrates LLMs with Linear's project management workflows to enable automated issue tracking and updates.

mcp-local-rag: Beneficial for privacy-centric processes, mcp-local-rag performs a local RAG-style search without using external APIs.

Conclusion

Together, MCP and A2A form the backbone of next-generation agentic AI, providing both contextual power and collaborative scale. MCP offers structured tool invocation and context management, which enables auditability and dynamic tool access and strengthens single-agent workflows. A2A enables scalable multi-agent systems across vendors and platforms through peer-to-peer discovery and secure messaging.

Strategic adoption of each protocol, or a combined approach, will make the difference between static AI assistants and adaptable, enterprise-grade agentic ecosystems in 2025 and beyond. By using both protocols, companies can combine contextual richness with collaborative breadth, enabling end-to-end automation across finance, healthcare, supply chains, and IT operations.

Future AGI has also introduced its own MCP Server - built to help LLMs run evaluations, manage datasets, apply safety checks, and generate synthetic data using natural language.

To learn more about integrating with Future AGI's MCP server, read the full documentation.
