Grok 3: Advancing LLM Evaluation and AI Performance

Explore Grok 3’s breakthroughs in AI performance, LLM evaluation, and real-time data access for enhanced AI model accuracy and efficiency.

1. Introduction

Grok 3, xAI's latest artificial intelligence model, is generating significant discussion among AI experts. It promises to outperform industry leaders such as GPT-4 and Google's Gemini in key disciplines, including mathematics, science, and programming. Can it live up to these bold claims? Let us examine Grok 3's performance in practical settings and see whether it truly surpasses GPT-4, Claude 3, and others on standard LLM evaluations.

2. Grok 3

Grok 3 is an advanced AI model made by xAI that performs strongly in science, mathematics, reasoning, and writing. It surpasses notable competitors such as GPT-4o and Claude 3.5 Sonnet, achieving an Elo score of about 1400 on LMArena, a platform where users compare AI models head to head. The model comes with new tools such as DeepSearch, which supports in-depth research, and Big Brain Mode, which is designed for especially challenging tasks.

Its 1-million-token context window lets it process a very large amount of text at once. Its relatively uncensored approach gives users considerable freedom but has also sparked debate about safety, and xAI says it is taking steps to keep the model responsible and safe. Grok 3 could change the way people learn, code, and do research. What about it really interests you?

This is a brief overview:

Performs strongly on science, coding, math, and reasoning tasks.

Scores about 1400 Elo on LMArena, surpassing GPT-4o and Claude 3.5 Sonnet.

DeepSearch handles in-depth research, while Big Brain Mode takes on especially difficult problems.

Processes up to 1 million tokens of context at once for large-scale text work.

The uncensored approach sparks controversy, but safety measures exist.

Fantastic for students, researchers, and developers.

Let’s take a deep dive into its technical foundation and other unique features.

3. Grok 3’s Technical Foundation

Grok 3 was trained on xAI's Colossus supercluster, a network of about 100,000 NVIDIA H100 GPUs. Training consumed over 200 million GPU-hours, roughly ten times the compute used for Grok 2. This scale enables massively parallel training and lets demanding workloads finish in a practical amount of time.
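To make these figures more concrete, the quick calculation below converts the quoted GPU-hours into wall-clock time. It is a back-of-the-envelope sketch that assumes all 100,000 GPUs ran concurrently for the entire run; if fewer were active at a time, training would have taken longer.

```python
# Back-of-the-envelope check of the training-compute figures quoted above.
# Assumption: all GPUs ran concurrently for the whole run. If fewer were
# active at once, the real wall-clock time would be longer.

TOTAL_GPU_HOURS = 200_000_000   # "over 200 million GPU hours" (from the text)
NUM_GPUS = 100_000              # "100,000 NVIDIA H100 GPUs" (from the text)


def wall_clock_days(total_gpu_hours: float, num_gpus: int) -> float:
    """Convert aggregate GPU-hours into days of wall-clock time."""
    hours_per_gpu = total_gpu_hours / num_gpus
    return hours_per_gpu / 24


days = wall_clock_days(TOTAL_GPU_HOURS, NUM_GPUS)
print(f"{TOTAL_GPU_HOURS / NUM_GPUS:.0f} hours per GPU = about {days:.0f} days")
```

Under that assumption, each GPU works for about 2,000 hours, which is roughly 83 days of continuous training.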

Architecture: xAI has not disclosed specifics such as the parameter count. Given the compute involved in its training on Colossus, Grok 3 is likely a transformer-based model, possibly a mixture-of-experts design, and it probably has hundreds of billions of parameters.

Context Window: Grok 3's 1-million-token context window, roughly eight times larger than those of earlier models, helps it excel at long-context benchmarks such as LOFT (128k), as benchmark comparisons show. This allows it to handle intricate questions, multi-turn dialogues, and long documents effectively. xAI has not described the attention mechanism behind it, but sparse attention or similar optimisations are plausible ways to make such lengths tractable. The capability matters for tasks that require extensive context, such as interpreting legal documents or conducting comprehensive research studies.
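Counting query-key pairs helps explain why a 1-million-token window pushes designs toward cheaper attention. The sketch below compares full causal attention with sliding-window attention, a common form of sparse attention. This is a generic illustration: xAI has not said which mechanism Grok 3 uses, and the 4,096-token window is an arbitrary, hypothetical value.

```python
def full_causal_pairs(n: int) -> int:
    """Query-key pairs scored by full causal attention: token i attends to tokens 0..i."""
    return n * (n + 1) // 2


def sliding_window_pairs(n: int, w: int) -> int:
    """Pairs scored when token i attends only to itself and the previous w - 1 tokens."""
    if n <= w:
        return full_causal_pairs(n)
    # The first w tokens see a growing prefix; every later token sees exactly w tokens.
    return w * (w + 1) // 2 + (n - w) * w


def brute_force_window_pairs(n: int, w: int) -> int:
    """Reference count by direct enumeration, for checking the formula on small inputs."""
    return sum(min(i + 1, w) for i in range(n))


N = 1_000_000   # context length from the text
W = 4_096       # hypothetical window size, chosen only for illustration

full = full_causal_pairs(N)
sparse = sliding_window_pairs(N, W)
print(f"full: {full:.3e} pairs, windowed: {sparse:.3e} pairs, ratio: {full / sparse:.0f}x")
```

At this length, full attention scores on the order of 5 × 10^11 pairs per layer, while the windowed variant scores about 4 × 10^9. The trade-off is that distant tokens no longer attend to each other directly, which is why designs such as Longformer add a few global tokens on top of the window.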

Training: Beyond raw scale, Grok 3 uses reinforcement learning (RL) at what xAI describes as unprecedented scale to improve its reasoning. RL provides iterative feedback on the model's outputs, rewarding correct reasoning and improving precision on intricate tasks.

4. Why is it unique?

Real-Time Data Access via X and Web

Grok 3 sets itself apart by building real-time data access into its functionality. Through its agent, it can retrieve live data from the internet, and it has a native connection to X. Users can ask, "What are the reactions of X users to the launch of Grok 3?" and receive answers informed by current sentiment and trends. Grok stays informed by continuously drawing on news, stock prices, and social media trends, which makes it a hybrid of a language model and a search engine. This helps ground its answers in the most recent available information.

Think Mode (Chain-of-Thought Transparency)

Grok 3's "Think" mode exposes its internal reasoning as it works through a problem. When the mode is on, the model shows a series of intermediate reasoning steps before giving its final answer. This transparency offers insight into how the model approaches and resolves difficult problems, and it helps users and developers follow its decision-making more precisely and troubleshoot problems interactively.

Big Brain Mode (Dynamic Compute Allocation)

xAI designed Big Brain mode for tasks that require more computational power. In this mode, Grok 3 dynamically allocates extra resources to improve accuracy on demanding queries, for example by using additional GPU capacity or multiple reasoning passes to cross-check and refine its responses. Basic queries still get fast answers, while challenging ones receive more effort.
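xAI has not published how Big Brain mode allocates compute. One well-known way to spend extra compute on cross-checking is self-consistency: sample several independent answers and keep the majority. The sketch below scales the number of samples with an estimated difficulty. The difficulty thresholds, the `SAMPLE_BUDGET` table, and the stand-in `noisy_solver` are all hypothetical.

```python
from collections import Counter
from typing import Callable

# Hypothetical budget: how many independent attempts to spend per difficulty tier.
SAMPLE_BUDGET = {"easy": 1, "medium": 5, "hard": 15}


def tier(difficulty: float) -> str:
    """Map a difficulty estimate in [0, 1] to a budget tier (thresholds are arbitrary)."""
    if difficulty < 0.3:
        return "easy"
    if difficulty < 0.7:
        return "medium"
    return "hard"


def answer_with_budget(question: str, difficulty: float,
                       solve_once: Callable[[str, int], str]) -> tuple[str, int]:
    """Run solve_once several times and return the majority answer plus samples used."""
    n = SAMPLE_BUDGET[tier(difficulty)]
    answers = [solve_once(question, seed) for seed in range(n)]
    majority, _count = Counter(answers).most_common(1)[0]
    return majority, n


def noisy_solver(question: str, seed: int) -> str:
    """Hypothetical stand-in for a model: right on most seeds, wrong on every third."""
    return "42" if seed % 3 != 0 else "41"


print(answer_with_budget("What is 6 * 7?", difficulty=0.1, solve_once=noisy_solver))  # ('41', 1)
print(answer_with_budget("What is 6 * 7?", difficulty=0.9, solve_once=noisy_solver))  # ('42', 15)
```

With a single sample, the cheap path happens to return the wrong answer. With 15 samples, the majority vote recovers the right one. This illustrates the trade-off between speed on easy queries and accuracy on hard ones.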

Grok Agents and Tool Use

Grok 3 is meant to be an intelligent agent that interacts with external tools, not a standalone chatbot. Its DeepSearch subsystem can call APIs, search the web, run code, and fold the results into its reasoning process. This tool use makes it a capable research assistant that can handle jobs such as retrieving historical stock prices or synthesising data into coherent reports.
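Agentic tool use generally follows a loop. The model either requests a tool call or returns a final answer, and each tool result is fed back into its context. The sketch below shows that loop with a scripted stand-in for the model. The tool names (`get_stock_price`, `web_search`), the message format, the ticker "ACME", and its price are hypothetical placeholders, not xAI's API.

```python
from typing import Callable


# Hypothetical tools. A real agent would call web APIs here; these return canned data.
def get_stock_price(ticker: str) -> str:
    fake_prices = {"ACME": "123.45"}  # placeholder data, not real market prices
    return fake_prices.get(ticker, "unknown")


def web_search(query: str) -> str:
    return f"(no live search in this sketch; query was {query!r})"


TOOLS: dict[str, Callable[[str], str]] = {
    "get_stock_price": get_stock_price,
    "web_search": web_search,
}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for the LLM: asks for one tool call, then answers using its result."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "tool": "get_stock_price", "arg": "ACME"}
    return {"type": "final", "content": f"ACME last traded at {tool_results[-1]['content']}."}


def run_agent(user_message: str, model=scripted_model, max_steps: int = 5) -> str:
    """Alternate between model decisions and tool execution until a final answer appears."""
    history = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        decision = model(history)
        if decision["type"] == "final":
            return decision["content"]
        result = TOOLS[decision["tool"]](decision["arg"])
        history.append({"role": "tool", "name": decision["tool"], "content": result})
    return "Stopped: step limit reached without a final answer."


print(run_agent("What is ACME's latest stock price?"))  # ACME last traded at 123.45.
```

The `max_steps` cap keeps a model that never produces a final answer from looping forever.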

5. Performance and Benchmark Results

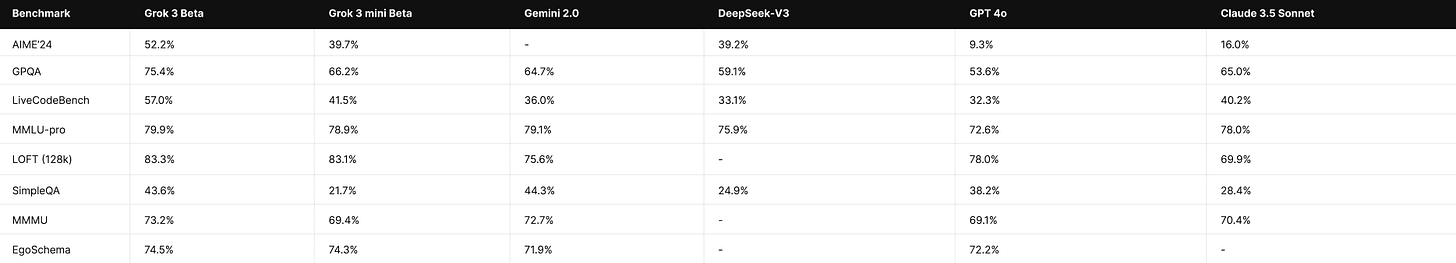

Even with reasoning disabled, Grok 3 provides quick, high-quality responses. The results below compare it with other top models and show where it does well:

Chatbot Arena Elo

In LMSYS's Chatbot Arena, which ranks models through head-to-head user comparisons, Grok 3 reached an Elo score of about 1402, topping the most advanced models from both OpenAI and Anthropic on the global leaderboard. This score is well above the usual 1230–1300 range for GPT-4 and supports xAI's claim that the model performs remarkably well on both academic benchmarks and real-world user preferences.
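Elo gaps can be translated into expected head-to-head win rates. Under the standard Elo formula, a model rated R_A is expected to score 1 / (1 + 10^((R_B - R_A) / 400)) against one rated R_B. The sketch applies this formula to the scores above, treating the Arena's Elo-style ratings as if they followed the classic formula exactly. That makes the results a rough reading, not an official statistic.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under Elo (win probability, ties counted as half)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


GROK3 = 1402  # Chatbot Arena score quoted in the text
for gpt4 in (1230, 1300):  # bounds of the GPT-4 range quoted in the text
    p = elo_expected_score(GROK3, gpt4)
    print(f"vs {gpt4}: Grok 3 preferred in about {p:.0%} of comparisons")
```

Running it gives about 73% against a 1230-rated model and about 64% against a 1300-rated one. A 100-point lead therefore means a clear, but far from total, preference.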

Mathematical Reasoning

Grok 3 scored 93.3% on AIME 2025, a challenging invitational mathematics competition for high-school students, answering nearly every problem correctly. Grok 3 Mini reached an exceptional 95.8% on AIME 2024, further evidence of strong reasoning capabilities. The model can analyse problems quickly, exploring alternative approaches and correcting its own mistakes along the way.

Knowledge and QA

On comprehensive knowledge tests such as MMLU-Pro, Grok 3 scored about 79.9%, slightly higher than Gemini 2.0's 79.1% and Claude 3.5's 78.0%. It performs well across a wide range of subjects, including history, physics, and law, and its built-in search for current references makes it even more adept at answering knowledge-based enquiries.

Coding and Technical Tasks

Grok 3 outperformed GPT-4 and Claude in xAI's reported comparisons, scoring 79.4% on LiveCodeBench (LCB) and 57.0% on a stricter metric. The model was designed to handle coding queries. According to xAI, it also writes code about 1.2 times faster than GPT-4. In Think mode, it can run code to check or improve its results, which reduces mistakes in final solutions.
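Running code to check an answer is a form of execution-based verification. The system generates candidate solutions, runs each against test cases, and keeps the first one that passes. The sketch below shows the idea with two hard-coded candidates standing in for model outputs. The candidates and tests are hypothetical. A real system would also run untrusted code in a sandbox, not with `exec` in the same process.

```python
from typing import Callable, Optional

# Two hypothetical model-generated candidates for "return the n-th Fibonacci number".
CANDIDATES = [
    "def fib(n):\n    return n if n < 2 else fib(n - 1) + fib(n - 2) + 1\n",  # buggy
    "def fib(n):\n    a, b = 0, 1\n    for _ in range(n):\n        a, b = b, a + b\n    return a\n",
]

TESTS = [(0, 0), (1, 1), (2, 1), (10, 55)]  # (input, expected output)


def load_function(source: str, name: str) -> Callable:
    """Execute candidate source in a fresh namespace and return the named function."""
    namespace: dict = {}
    exec(source, namespace)  # unsafe for untrusted code; use a sandbox in practice
    return namespace[name]


def passes(source: str, tests, name: str) -> bool:
    """True if the candidate loads and returns the expected value for every test case."""
    try:
        fn = load_function(source, name)
        return all(fn(x) == expected for x, expected in tests)
    except Exception:  # syntax errors and runtime errors both count as failures
        return False


def first_passing(candidates, tests, name: str = "fib") -> Optional[int]:
    """Return the index of the first candidate that passes every test, or None."""
    for i, src in enumerate(candidates):
        if passes(src, tests, name):
            return i
    return None


print(first_passing(CANDIDATES, TESTS))  # 1
```

The first candidate fails at `fib(2)`, so verification selects the second one. This is how running code can lower the error rate of final solutions.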

Long-Context Tasks

With a context window of up to 1M tokens, Grok 3 can process extensive documents, achieving a state-of-the-art accuracy of approximately 83.3% on the LOFT 128k benchmark. It can read and synthesise information from hundreds of pages, which makes it highly effective for complex retrieval and summarisation tasks.

6. How to Actually Use Grok 3?

At the time of writing, Grok 3 is not available via API. It is included with an X Premium+ subscription, priced at $40 per month. The Grok app is also available on iOS and Android, as well as on the web.

Future AGI provides clear, real-time insight into how well your xAI-based app is performing. Its user-friendly dashboard displays critical metrics and alerts so you can quickly identify and resolve issues, and it offers interactive statistics and simple tools for monitoring error trends and usage. This setup lets you address glitches promptly and keep your application running smoothly.
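Dashboards of this kind typically track metrics such as the error rate over a recent window of requests. The sketch below computes a rolling error rate with an alert threshold. It is a generic illustration, not Future AGI's API. The `RollingErrorRate` class, the window size, the threshold, and the status codes are hypothetical.

```python
from collections import deque


class RollingErrorRate:
    """Track the error rate over the most recent `window` requests (hypothetical helper)."""

    def __init__(self, window: int = 100, alert_threshold: float = 0.05):
        self.results = deque(maxlen=window)  # True = request failed; old entries drop off
        self.alert_threshold = alert_threshold

    def record(self, failed: bool) -> None:
        self.results.append(failed)

    @property
    def error_rate(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 0.0

    def should_alert(self) -> bool:
        return self.error_rate > self.alert_threshold


monitor = RollingErrorRate(window=10, alert_threshold=0.2)
for status in [200, 200, 500, 200, 503, 500, 200, 200, 200, 200]:  # made-up HTTP statuses
    monitor.record(failed=status >= 500)
print(f"error rate {monitor.error_rate:.0%}, alert: {monitor.should_alert()}")  # 30%, True
```

A bounded `deque` keeps memory constant and lets old failures age out automatically, so the alert reflects recent behaviour, not the app's whole history.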

7. Conclusion

All things considered, the release of Grok 3 clearly signals to the AI community that the age of reasoning agents has arrived. The model's ability to reason in steps, verify its own work, and stay current in real time offers a window on how future AI systems may operate. Comparing it with Gemini 1.5, Claude 3, and GPT-4 reveals several fresh ideas. Whether through safety, multimodality, scalability, or tool use, each model contributes something unique to the goal of making AI broader and more capable.

Arriving in 2025 after GPT-4, Claude 3, and Gemini 1.5, Grok 3 adds its own distinctive strengths to the field. Its strong reasoning and long-context handling, together with its open integration approach, could help pave the way for the first wave of AGI systems that are both powerful and accessible to many people.

8. FAQs

What distinguishes Grok 3 from other frontier models like GPT-4, Claude 3, and Gemini 1.5?

Grok 3 is designed especially for deep reasoning, with an ultra-long context capability (up to 1M tokens), integrated real-time data access via X, and dedicated modes (Think and Big Brain) that improve its problem-solving and code execution skills.

How does Grok 3’s extended context window benefit advanced developers?

It is perfect for complicated research or code analysis since it can handle very lengthy documents (up to 1 million tokens) without losing track of previous context, allowing developers to work with large data sources or multi-part discussions.

What is the significance of Grok 3’s “Think” mode?

"Think" mode exposes the model's chain of thought, letting users observe intermediate reasoning steps and understand how it reaches its final answer. This makes the model's outputs more transparent and trustworthy.

What computational resources were used to train Grok 3?

Grok 3 was trained on the Colossus supercluster, which has about 100,000 NVIDIA H100 GPUs and supplied more than 200 million GPU-hours of compute, roughly ten times what was used for Grok 2.
