Build and Improve RAG Application with LangChain and Observability

Master LangChain RAG: boost Retrieval Augmented Generation with LLM observability. Compare recursive, semantic and Sub-Q retrieval for faster, grounded answers.

1. Introduction

Retrieval-Augmented Generation (RAG) systems face significant challenges when scaling to production environments. While LangChain provides powerful chain-based orchestration with flexible retrieval capabilities, maintaining answer quality and trustworthiness at scale requires proper observability. Without visibility into your system's performance, you cannot identify why answers drift or why relevant chunks get missed.

This guide walks through three incremental RAG configurations: a recursive-chunking baseline, semantic chunking, and Chain-of-Thought (sub-question) retrieval. Each one is measured continuously with Future AGI, so you can deploy with confidence and maintain performance over time.

For hands-on implementation, follow our comprehensive cookbook: https://docs.futureagi.com/cookbook/cookbook5/How-to-build-and-incrementally-improve-RAG-applications-in-Langchain

2. Why LangChain RAG Requires Observability

LangChain's modular design creates multiple failure points that are invisible without proper monitoring. Each component - embedding models, chunking strategies, prompt templates - can introduce issues like embedding drift, chunk overlap problems, and prompt leakage. RAG failures are particularly challenging to detect because incorrect answers often appear fluent and well-formatted while citing wrong sources.

LLM observability addresses these blind spots by tracing every component, scoring context relevance, and measuring how well generations are grounded in retrieved content. This visibility enables data-driven debugging and iteration rather than relying on manual inspection.
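To make "tracing every component" concrete, here is a minimal sketch of per-stage tracing in plain Python. It is not Future AGI's instrumentor or API; the `traced` decorator, the `TRACE` list, and the stand-in `retrieve` and `generate` functions are hypothetical names used only for illustration.

```python
import time
from functools import wraps

TRACE = []  # in-memory span log; a real tracer would export these records


def traced(stage):
    """Record the stage name, latency, and output size of every call."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            result = fn(*args, **kwargs)
            TRACE.append({
                "stage": stage,
                "seconds": time.perf_counter() - start,
                "output_chars": len(str(result)),
            })
            return result
        return wrapper
    return decorator


@traced("retrieve")
def retrieve(question):
    # Hypothetical stand-in for a real retriever call.
    return ["Transformers rely on self-attention."]


@traced("generate")
def generate(question, passages):
    # Hypothetical stand-in for a real LLM call.
    return "Answer based on: " + " ".join(passages)


question = "What do Transformers rely on?"
answer = generate(question, retrieve(question))
```

Each entry in `TRACE` then identifies which stage ran, how long it took, and how much it returned, which is the kind of record that lets a bad answer be traced back to retrieval or to generation.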

3. Tech Stack for Production-Ready LangChain RAG

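Our production workflow uses LangChain for orchestration, Chroma as the vector store, OpenAI models for embeddings and generation, BeautifulSoup for parsing the source pages, and Future AGI for tracing and evaluation.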

Installing Dependencies

pip install langchain langchain-core langchain-community langchain-experimental \
    langchain-openai openai chromadb beautifulsoup4 pandas futureagi-sdk

Step 1: Baseline LangChain RAG with Recursive Splitter

Setting Up the Dataset

import pandas as pd

dataset = pd.read_csv("Ragdata.csv")

Our CSV contains Query_Text, Target_Context, and Category columns. Each query is matched against Wikipedia pages covering Transformer, BERT, and GPT topics.

Loading Pages and Splitting Recursively

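from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import Chroma
from langchain.text_splitter import RecursiveCharacterTextSplitter
# ChatOpenAI and OpenAIEmbeddings live in the langchain-openai package
# (pip install langchain-openai); both read OPENAI_API_KEY from the environment.
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

urls = [
    "https://en.wikipedia.org/wiki/Attention_Is_All_You_Need",
    "https://en.wikipedia.org/wiki/BERT_(language_model)",
    "https://en.wikipedia.org/wiki/Generative_pre-trained_transformer"
]

# Load each Wikipedia page into LangChain Document objects.
docs = []
for url in urls:
    docs.extend(WebBaseLoader(url).load())

# Split into 1000-character chunks that overlap by 200 characters.
splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
chunks = splitter.split_documents(docs)

# The original snippet used `embeddings` without defining it; OpenAIEmbeddings
# with its default model is assumed here.
embeddings = OpenAIEmbeddings()

vectorstore = Chroma.from_documents(chunks, embedding=embeddings,
                                    persist_directory="chroma_db")
retriever = vectorstore.as_retriever()
llm = ChatOpenAI(model="gpt-4o-mini")

Running the Baseline Chain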

def openai_llm(question, context):
    # Send the question and the retrieved context as a single user message.
    prompt = f"Question: {question}\n\nContext:\n{context}"
    return llm.invoke([{'role': 'user', 'content': prompt}]).content

def rag_chain(question):
    # Retrieve chunks, join them into one context string, then generate.
    docs = retriever.invoke(question)
    context = "\n\n".join(d.page_content for d in docs)
    return openai_llm(question, context)

The Future AGI instrumentor automatically traces every call, enabling later comparison of metrics across different implementations.
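The evaluation step reads a retrieved context and a generated response for each query, so the chain has to be run over the dataset first. A minimal sketch of that collection loop follows. The `collect_records` helper and the two stand-in functions are hypothetical; in the real pipeline, `retrieve` would wrap `retriever.invoke` and `generate` would wrap `openai_llm`.

```python
def collect_records(queries, retrieve, generate):
    """Run each query and keep its context and response for later scoring."""
    records = []
    for query in queries:
        passages = retrieve(query)
        context = "\n\n".join(passages)
        records.append({
            "Query_Text": query,
            "context": context,
            "response": generate(query, context),
        })
    return records


# Hypothetical stand-ins so the sketch runs without a vector store or API key.
def fake_retrieve(query):
    return [f"passage about {query}"]


def fake_generate(query, context):
    return f"answer to {query}"


records = collect_records(["BERT", "GPT"], fake_retrieve, fake_generate)
```

Keeping the context alongside the response matters because the retrieval metrics and the grounding metric need both sides of the exchange.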

Evaluating the Baseline

We measure performance across three dimensions using Future AGI's evaluation framework:

from fi.evals import ContextRelevance, ContextRetrieval, Groundedness
from fi.evals import TestCase

# `evaluator` is assumed to be a Future AGI evaluator client created beforehand;
# the original snippet does not show its setup.
def evaluate_row(row):
    # Each row must already hold the retrieved context and generated response.
    test = TestCase(
        input=row['Query_Text'],
        context=row['context'],
        response=row['response']
    )
    return evaluator.evaluate(
        eval_templates=[ContextRelevance, ContextRetrieval, Groundedness],
        inputs=[test]
    )

These metrics quantify retrieval and answer quality instead of relying on subjective assessment.
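For intuition about what a grounding score measures, here is a deliberately crude lexical proxy: the share of distinct response tokens that also occur in the context. This is an illustrative assumption, not how Future AGI computes Groundedness, and `lexical_groundedness` is a hypothetical helper.

```python
import re


def tokens(text):
    """Lowercased set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def lexical_groundedness(response, context):
    """Fraction of distinct response tokens that also occur in the context."""
    response_tokens = tokens(response)
    if not response_tokens:
        return 0.0
    return len(response_tokens & tokens(context)) / len(response_tokens)


context = "BERT is an encoder-only transformer model."
grounded = lexical_groundedness("BERT is an encoder-only model.", context)
ungrounded = lexical_groundedness("GPT was released in 2018.", context)
```

A proxy like this misses paraphrases and can reward copied but irrelevant text, which is why model-based evaluators are used for the real measurement.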

Step 2: Boost Recall with Semantic Chunking

Recursive splitting can break sentences mid-thought, leading to context fragmentation. SemanticChunker groups content by meaning, often improving recall performance.

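from langchain_experimental.text_splitter import SemanticChunker

# Split where the embedding distance between neighbouring sentences is unusually large.
s_chunker = SemanticChunker(embeddings, breakpoint_threshold_type="percentile")
sem_docs = s_chunker.create_documents([d.page_content for d in docs])

# A separate directory keeps semantic chunks from being added to the
# baseline collection persisted in "chroma_db".
vectorstore = Chroma.from_documents(sem_docs, embedding=embeddings,
                                    persist_directory="chroma_db_semantic")
retriever = vectorstore.as_retriever()

Initial testing showed Context Retrieval scores improving from 0.80 to 0.86.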
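The idea behind percentile breakpoints can be sketched without any embedding model: measure the distance between neighbouring sentences and start a new chunk wherever that distance exceeds a chosen percentile of all distances. The code below is a toy illustration under that assumption, using bag-of-words vectors in place of real embeddings. It is not SemanticChunker's implementation, and every name in it is hypothetical.

```python
import math
import re
from collections import Counter


def embed(sentence):
    # Toy bag-of-words vector; a real pipeline would call an embedding model.
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def cosine_distance(a, b):
    dot = sum(a[word] * b[word] for word in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return 1.0 - (dot / norm if norm else 0.0)


def percentile(values, pct):
    """Percentile with linear interpolation between the two nearest ranks."""
    ordered = sorted(values)
    position = (len(ordered) - 1) * pct / 100
    lower, upper = math.floor(position), math.ceil(position)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (position - lower)


def semantic_chunks(sentences, pct=75):
    """Group sentences, breaking where neighbour distance exceeds the percentile."""
    if len(sentences) < 2:
        return [" ".join(sentences)] if sentences else []
    vectors = [embed(s) for s in sentences]
    distances = [cosine_distance(vectors[i], vectors[i + 1])
                 for i in range(len(vectors) - 1)]
    threshold = percentile(distances, pct)
    chunks, current = [], [sentences[0]]
    for sentence, distance in zip(sentences[1:], distances):
        if distance > threshold:  # topic shift: close the current chunk
            chunks.append(" ".join(current))
            current = []
        current.append(sentence)
    chunks.append(" ".join(current))
    return chunks


sentences = [
    "BERT uses masked language modeling.",
    "BERT uses an encoder stack.",
    "GPT models generate text left to right.",
    "GPT models use a decoder stack.",
]
chunks = semantic_chunks(sentences, pct=75)
```

In this example the only break falls between the BERT sentences and the GPT sentences, because that is where neighbouring sentences share no vocabulary.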

Step 3: Enhance Groundedness via Chain-of-Thought Retrieval

Complex questions require information from multiple focused passages. This approach breaks queries into sub-questions, retrieves context for each, then synthesizes a comprehensive answer.

from langchain_core.prompts import PromptTemplate
from langchain_core.runnables import RunnableLambda, RunnablePassthrough

# Ask for one sub-question per line with the "SUBQ:" prefix that the parser expects.
subq_prompt = PromptTemplate.from_template(
    "Break down this question into 2-3 sub-questions, one per line, "
    "each prefixed with 'SUBQ:'.\nQuestion: {input}"
)

def parse_subqs(text):
    # `text` is the chat model's message; keep only lines that carry the marker.
    return [line.split("SUBQ:")[1].strip()
            for line in text.content.split("\n") if "SUBQ:" in line]

subq_chain = subq_prompt | llm | RunnableLambda(parse_subqs)

qa_prompt = PromptTemplate.from_template(
    "Answer using ALL context.\nCONTEXTS:\n{contexts}\n\nQuestion: {input}\nAnswer:"
)

# 1) generate sub-questions, 2) retrieve context for each, 3) answer over all of it.
full_chain = (
    RunnablePassthrough.assign(
        subqs=lambda x: subq_chain.invoke({"input": x["input"]}))
    .assign(contexts=lambda x: "\n\n".join(
        doc.page_content
        for q in x["subqs"]
        for doc in retriever.invoke(q)
    ))
    .assign(answer=qa_prompt | llm)
)

This approach achieved the highest Groundedness score of the three configurations, 0.31.
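Because the chain joins every passage retrieved for every sub-question, a passage matched by two sub-questions is sent to the model twice. One possible refinement, sketched here as an assumption rather than as part of the original pipeline, is to drop exact duplicates while preserving retrieval order. `merge_contexts` and the `INDEX` lookup are hypothetical stand-ins.

```python
def merge_contexts(subquestions, retrieve):
    """Concatenate passages for all sub-questions, dropping exact duplicates."""
    seen = set()
    merged = []
    for subq in subquestions:
        for passage in retrieve(subq):
            if passage not in seen:
                seen.add(passage)
                merged.append(passage)
    return "\n\n".join(merged)


# Hypothetical stand-in for a retriever: sub-question -> passages.
INDEX = {
    "What is BERT?": ["BERT is an encoder model.", "Transformers use attention."],
    "What is GPT?": ["GPT is a decoder model.", "Transformers use attention."],
}

contexts = merge_contexts(list(INDEX), INDEX.get)
```

With a LangChain retriever, the same loop would compare `doc.page_content` values instead of raw strings.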

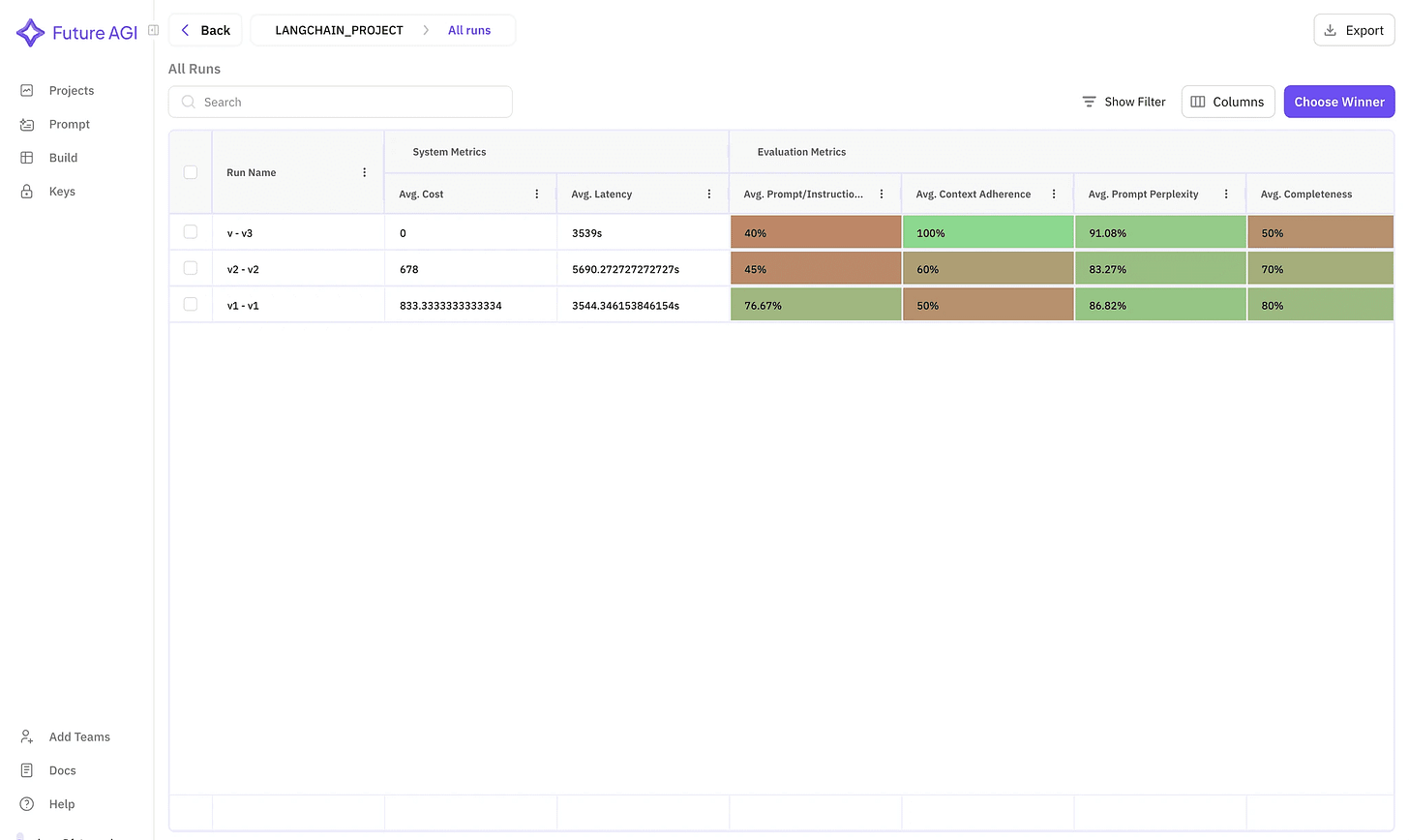

Comparative Evaluation Results

Performance Summary

Key Findings

Chain-of-Thought retrieval delivers superior performance for both retrieval accuracy and answer grounding, making it optimal for complex queries. Semantic chunking provides balanced performance with better token efficiency compared to recursive splitting. Recursive splitting should only be used when latency requirements outweigh accuracy needs, and performance monitoring remains essential to prevent silent degradation.

Production Best Practices

Implement caching for frequently asked sub-questions to reduce token consumption. Optimize chunk size and overlap using real data: start with a chunk size of 1000 and an overlap of 200, then iterate based on performance metrics. Guard against embedding drift by re-embedding new documents weekly to maintain retrieval accuracy. Set up alerts for grounding scores that fall below an acceptable threshold so that poor answers do not reach users.
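A minimal sketch of sub-question caching, assuming that light normalization (case and whitespace) is enough to treat two sub-questions as the same key. `expensive_lookup` is a hypothetical stand-in for whatever retrieval or LLM call handles a sub-question, and the cache size is an arbitrary choice.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the expensive path actually runs


def expensive_lookup(subquestion):
    # Hypothetical stand-in for a retrieval or LLM call.
    CALLS["count"] += 1
    # A tuple is returned so callers cannot mutate the cached value.
    return (f"passage for: {subquestion}",)


def normalize(subquestion):
    """Lowercase and collapse whitespace so near-identical phrasings share a key."""
    return " ".join(subquestion.lower().split())


@lru_cache(maxsize=1024)
def _cached_lookup(normalized):
    return expensive_lookup(normalized)


def cached_lookup(subquestion):
    return _cached_lookup(normalize(subquestion))


first = cached_lookup("What is BERT?")
second = cached_lookup("  what is  bert? ")
```

An in-process cache like this is lost on restart and is not shared between workers, so a production setup would likely need an external store and an invalidation rule tied to re-indexing.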

Future Improvements

Consider hybrid approaches that combine semantic chunking with Chain-of-Thought retrieval triggered by query complexity heuristics. Explore domain-specific embedding models for specialized use cases to improve retrieval precision.
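A query complexity heuristic could be as simple as the sketch below, which routes a question to the sub-question path when it contains a comparison cue, is long, or asks several things at once. The cue list, the word limit, and the function names are all illustrative assumptions that would need tuning against real traffic.

```python
import re

# Hypothetical cue words that suggest a multi-part or comparative question.
COMPARISON_CUES = {"compare", "versus", "vs", "difference", "differences"}


def looks_complex(question, max_simple_words=12):
    """Heuristic: comparison cue, long question, or more than one question mark."""
    words = re.findall(r"[a-z]+", question.lower())
    has_cue = any(word in COMPARISON_CUES for word in words)
    return has_cue or len(words) > max_simple_words or question.count("?") > 1


def route(question, single_pass, decomposed):
    """Send complex questions to the sub-question chain, the rest to the cheap path."""
    chain = decomposed if looks_complex(question) else single_pass
    return chain(question)


simple = route("What is BERT?", lambda q: "single-pass", lambda q: "decomposed")
complex_ = route("Compare BERT and GPT pretraining objectives.",
                 lambda q: "single-pass", lambda q: "decomposed")
```

Logging which path each query takes alongside its evaluation scores would show whether the heuristic sends the right questions down the more expensive route.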

Conclusion

Building LangChain RAG systems is straightforward, but maintaining accuracy at scale requires systematic measurement and iteration. Combining retrieval improvements with comprehensive LLM observability through Future AGI enables rapid iteration, early detection of performance degradation, and delivery of trustworthy answers to users.

Ready to improve your LangChain RAG pipeline? Start implementing LLM observability today and experience measurable improvements in your Retrieval-Augmented Generation accuracy - sign up for Future AGI's free trial now!