AI Infrastructure Guide: Scale AI Operations Efficiently

Master AI infrastructure scaling with proven strategies for distributed training, MLOps pipelines, and enterprise-level cost control.

Introduction

AI can transform businesses, but weak infrastructure kills projects before they start. Companies spend millions on advanced models only to find their systems can't handle the workload. Training takes months instead of days, and systems that worked in testing fail under real traffic.

The cost is brutal: cloud bills hit millions while delivering poor results. Industry surveys repeatedly report that most AI and data initiatives stall before production, with infrastructure problems and runaway costs among the commonly cited causes. The truth is simple: good infrastructure decides if your AI succeeds or fails. Smart choices accelerate your operations; bad ones guarantee expensive disasters.

The Problem Every Developer Faces Today

You might excel at PyTorch or TensorFlow, but coding skills alone won't cut it. Today's developers must think like infrastructure architects to build systems that last.

Most teams hit the same barrier when scaling from experiments to production. Everything slows down, budgets explode, and progress stops. There's a massive gap between coding ability and understanding what AI systems need to run properly. Most people lack the tools to bridge this gap.

Why Enterprises Keep Struggling

Companies hire top AI talent but let outdated infrastructure kill innovation. Managing training across environments becomes complex, requiring smart cost control and workload distribution strategies.

Add data pipelines, model deployments, and monitoring, and you face operational problems that standard tools can't solve.

The Resource Problem: Teams invest heavily in talent but weak foundations slow feature development and delivery.

Multi-Cloud Headaches: Coordinating on-premise, cloud, and edge resources requires careful planning to avoid waste and maintain smooth operations.

Operational Chaos: Tracking data flows, versions, and system health at scale overwhelms basic approaches, causing failures and downtime.

This guide provides practical strategies with real implementation details to build AI infrastructure that scales and performs when it matters.

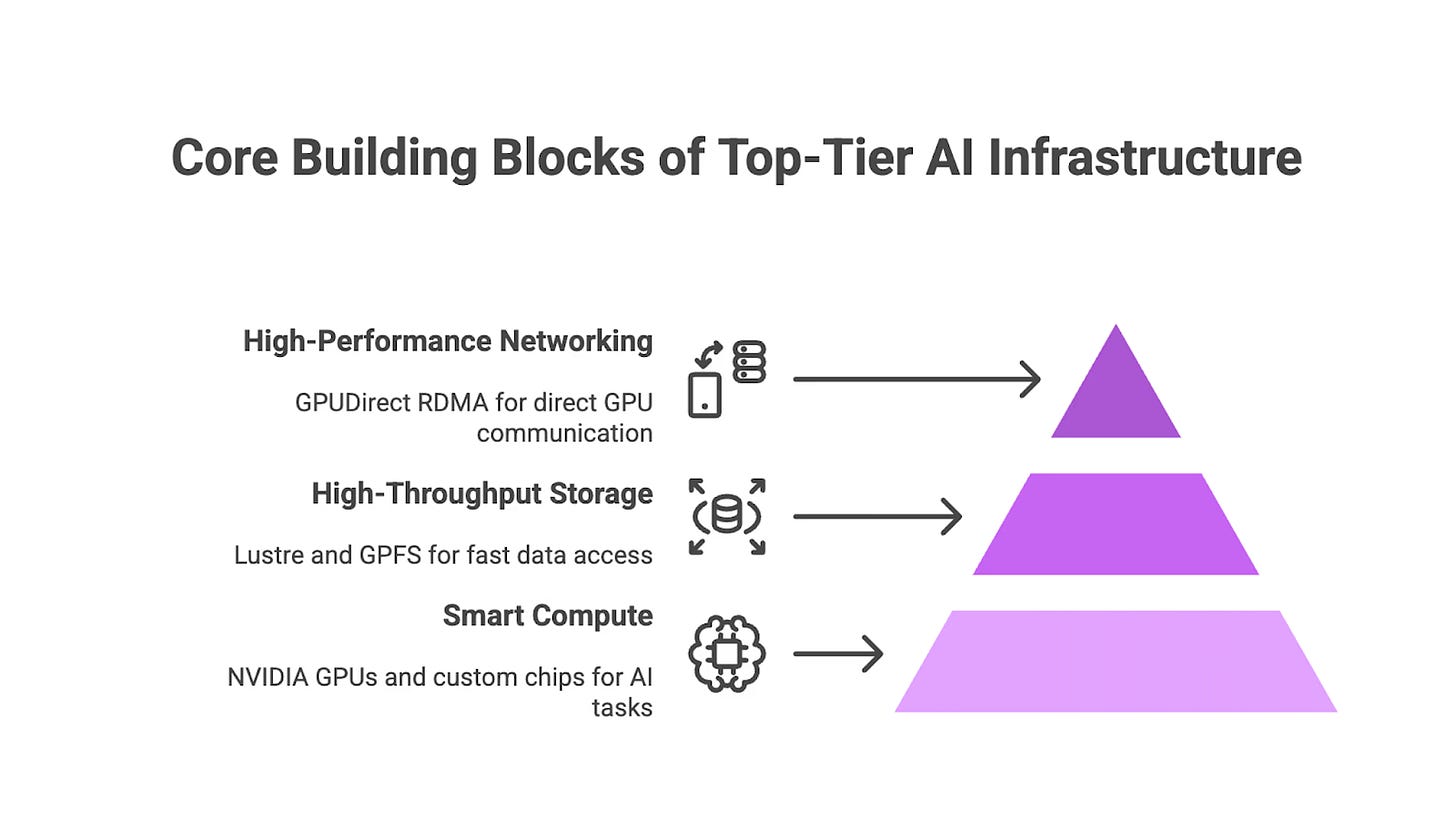

Part 1: The Core Building Blocks of Top-Tier AI Infrastructure

This section focuses on technical details that transform lab setups into production-level AI powerhouses.

1.1 Smart Compute Approaches for Demanding AI Tasks

Hardware choice determines how fast your AI jobs run. NVIDIA's Ampere and Hopper GPUs accelerate training with Tensor Cores that use mixed precision for speed without sacrificing accuracy. They integrate well with other hardware for heavy workloads. Don't overlook CPUs for data preparation, and consider custom chips for specialized processes.

GPU Architecture: Ampere and Hopper GPUs use Tensor Cores for fast matrix operations. Ampere supports TF32, BF16, and FP16, while Hopper adds FP8 through its Transformer Engine, which can make transformer training up to 3x faster on suitable workloads.

Interconnection: NVLink and NVSwitch enable high-speed data transfer between GPUs, while InfiniBand and RoCE support distributed training with high bandwidth and low latency.

Beyond GPUs: CPUs handle data preparation smoothly, while specialized accelerators like Google's TPUs or AWS's Trainium and Inferentia excel at specific tasks like large-scale inference or training.
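
To see how mixed precision can keep accuracy, here is a minimal sketch of loss scaling, a standard companion to FP16 training. It uses Python's `struct` half-precision format to mimic FP16 rounding. The gradient value and the scale factor of 1024 are illustrative assumptions, not settings from any particular framework.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", value))[0]


def scaled_fp16_gradient(grad: float, loss_scale: float) -> float:
    """Store grad * loss_scale in FP16, then unscale in full precision."""
    stored = to_fp16(grad * loss_scale)  # what the FP16 buffer would hold
    return stored / loss_scale           # unscaling happens in higher precision


tiny_grad = 1e-8  # hypothetical gradient below the FP16 representable range

print(to_fp16(tiny_grad))                       # underflows to 0.0
print(scaled_fp16_gradient(tiny_grad, 1024.0))  # close to 1e-8 again
```

Without scaling, the small gradient is lost entirely. With scaling, it survives the trip through FP16 with a small rounding error.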

1.2 High-Throughput Storage Architecture for the AI Data Lifecycle

Storage can make or break AI pipelines when working with large datasets requiring fast access. Parallel file systems such as Lustre and GPFS let many nodes read the same data concurrently during training, while object storage manages long-term data lakes efficiently. Formats like Parquet and TFRecord reduce loading times by packing records into large files that can be read sequentially, and strong metadata systems ensure data traceability.

Tiered Storage: Use Lustre or GPFS for fast access to active training data, then rely on scalable object storage for larger archives to balance cost and speed.

Optimized Data Formats: Parquet and TFRecord store data in layouts suited to large sequential reads, and libraries such as Petastorm load Parquet datasets directly into TensorFlow and PyTorch, reducing I/O delays.

Metadata Management: Use catalogs to organize vast datasets, simplify searching, and maintain data lineage for reliable AI results.
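
A quick back-of-the-envelope check helps when sizing the fast tier. The sketch below estimates the read throughput storage must sustain to keep every GPU fed. The job size, per-GPU sample rate, and sample size are hypothetical numbers chosen for illustration.

```python
def required_read_throughput(num_gpus: int,
                             samples_per_sec_per_gpu: float,
                             bytes_per_sample: float) -> float:
    """Bytes per second the storage tier must sustain to keep every GPU fed."""
    return num_gpus * samples_per_sec_per_gpu * bytes_per_sample


def storage_is_bottleneck(required_bytes_per_sec: float,
                          storage_bytes_per_sec: float) -> bool:
    """True when the job wants data faster than storage can deliver it."""
    return required_bytes_per_sec > storage_bytes_per_sec


# Hypothetical job: 64 GPUs, 2,000 images/s per GPU, 150 KB per image.
need = required_read_throughput(64, 2000, 150_000)
print(f"required: {need / 1e9:.1f} GB/s")
print(storage_is_bottleneck(need, 10e9))  # compared with a 10 GB/s tier
```

If the required rate exceeds what the tier delivers, the GPUs sit idle waiting for data, and faster storage or a more compact format pays for itself.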

1.3 High-Performance Networking for Distributed Systems

Networks hold large AI setups together, so getting them right means faster multi-machine training. Target low-latency, high-throughput fabrics to keep distributed tasks running smoothly. GPUDirect RDMA lets GPUs on different machines exchange data without staging it through host memory, which improves performance.

Low-Latency Fabrics: Use high-speed, low-latency options like InfiniBand or RoCE for distributed training to maintain smooth data flow.

Direct GPU Communication: GPUDirect RDMA lets network adapters read and write GPU memory directly, so data moving between GPUs on different nodes skips staging copies in host memory, reducing CPU overhead and speeding up distributed tasks.
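
To get a feel for why bandwidth and latency both matter, the following sketch estimates gradient synchronization time for a ring all-reduce using the common alpha-beta cost model. The payload size, link speed, and latency are hypothetical, and real collectives add overheads this model ignores.

```python
def ring_allreduce_seconds(payload_bytes: float,
                           num_gpus: int,
                           link_bytes_per_sec: float,
                           step_latency_sec: float = 0.0) -> float:
    """Estimate ring all-reduce time with a simple alpha-beta cost model.

    A ring all-reduce takes 2 * (N - 1) steps (reduce-scatter, then
    all-gather), and each step moves payload / N bytes over every link.
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    steps = 2 * (num_gpus - 1)
    chunk = payload_bytes / num_gpus
    return steps * (step_latency_sec + chunk / link_bytes_per_sec)


# Hypothetical: 2 GB of gradients, 8 GPUs, 25 GB/s links, 5 microsecond latency.
estimate = ring_allreduce_seconds(2e9, 8, 25e9, step_latency_sec=5e-6)
print(f"{estimate * 1000:.1f} ms per synchronization")
```

Halving link bandwidth roughly doubles this figure, which is why the fabric choice shows up directly in training step time.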

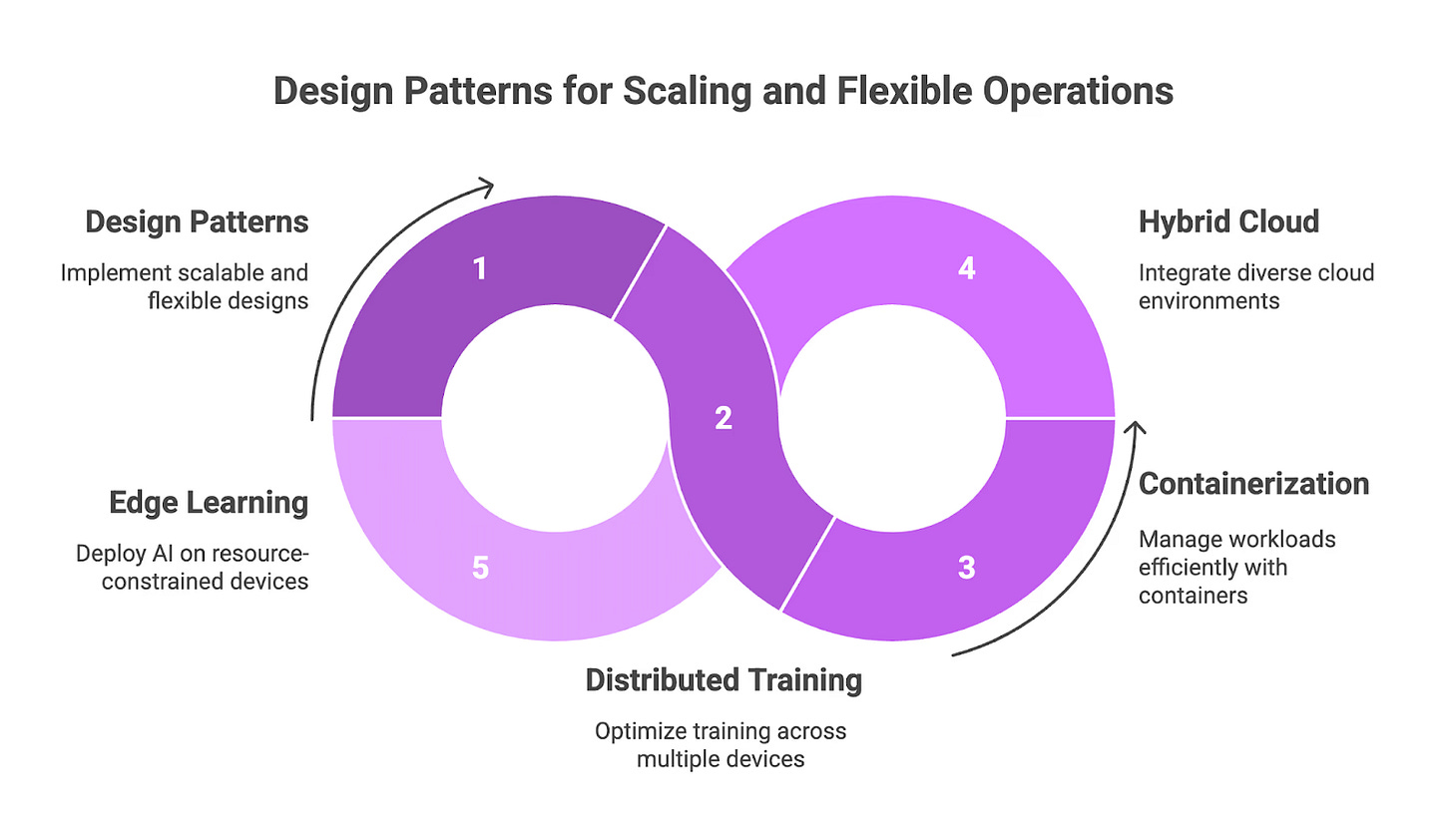

Part 2: Design Patterns for Scaling and Flexible Operations

This section covers design blueprints for AI systems that grow and adapt seamlessly.

2.1 Frameworks and Layouts for Distributed Training

Training massive AI models requires distributing work across machines and GPUs. The best approach depends on your specific needs. Data parallelism works when models fit in single GPU memory but datasets are huge. Model parallelism helps with giant models that won't fit on one device. Pipeline parallelism treats batches like assembly lines to keep all GPUs busy.

Parallelism Strategies: Use data parallelism when the model fits in a single GPU's memory and the dataset is large. Apply model parallelism for models too large for one device, such as GPT-scale language models. Implement pipeline parallelism by splitting the model into sequential stages and feeding micro-batches through them to reduce idle time.

Framework Implementation: PyTorch's DistributedDataParallel delivers strong results with minimal setup. DeepSpeed offers ZeRO optimizations for training large models without memory overload. Horovod provides ring-allreduce training across TensorFlow, PyTorch, and other frameworks; any speedup over a native PyTorch setup depends on the workload and interconnect, so benchmark before switching.
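
The core idea of data parallelism can be shown without any framework. This minimal sketch, assuming a toy one-parameter linear model and equally sized shards, checks that averaging per-worker gradients gives the same update as one large batch. The function names are illustrative and not part of PyTorch, DeepSpeed, or Horovod.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def mse_gradient(w: float, batch: List[Sample]) -> float:
    """Gradient of mean squared error for the model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_gradient(w: float, batch: List[Sample], workers: int) -> float:
    """Split the batch into equal shards, compute local gradients, average them.

    The final averaging is the role an all-reduce plays in real frameworks.
    """
    if len(batch) % workers != 0:
        raise ValueError("batch size must divide evenly across workers")
    shard_size = len(batch) // workers
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(workers)]
    local_grads = [mse_gradient(w, shard) for shard in shards]  # one per worker
    return sum(local_grads) / workers


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # toy data with true w = 3
single = mse_gradient(0.5, data)
parallel = data_parallel_gradient(0.5, data, workers=4)
print(abs(single - parallel) < 1e-9)  # True: same update as one big batch
```

Because the result matches the single-device gradient, adding workers changes how fast each step runs, not what the model learns from a given global batch.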

2.2 Scaling with Containers and Orchestration

Containers make AI workloads portable and manageable, but handling GPUs in Kubernetes requires proper tools and planning. The NVIDIA GPU Operator automates driver installation and GPU scheduling. Resource limits and priority classes help prioritize critical training tasks. Different schedulers excel in different scenarios.

NVIDIA GPU Operator: Handles driver deployment and GPU management automatically, and supports GPU time-slicing and Multi-Instance GPU (MIG) configurations to maximize hardware utilization.

Scheduler Selection: Choose Kubernetes for dynamic, cloud-native workloads needing auto-scaling and flexible resources. Pick Slurm for large, tightly-coupled training jobs requiring precise control and built-in MPI support.
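
As a rough illustration of GPU requests and priority classes in Kubernetes, the sketch below builds a Pod manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. The job name, image, and PriorityClass are hypothetical; `nvidia.com/gpu` is the resource name exposed by NVIDIA's device plugin.

```python
import json
from typing import Any, Dict


def gpu_training_pod(name: str, image: str, gpus: int,
                     priority_class: str) -> Dict[str, Any]:
    """Build a Pod manifest that requests whole GPUs for one training container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "priorityClassName": priority_class,  # must already exist in the cluster
            "restartPolicy": "Never",
            "containers": [{
                "name": "trainer",
                "image": image,
                # Extended resources such as GPUs are requested under limits.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


manifest = gpu_training_pod(
    name="bert-finetune",                       # hypothetical job name
    image="registry.example.com/train:latest",  # hypothetical image
    gpus=4,
    priority_class="training-critical",         # hypothetical PriorityClass
)
print(json.dumps(manifest, indent=2))
```

Generating manifests from code like this keeps GPU counts and priorities consistent across jobs, though many teams use Helm or Kustomize for the same purpose.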

2.3 Setting Up Hybrid and Multi-Cloud Environments

Multi-cloud AI infrastructure provides flexibility but increases complexity around data movement and cost management. Data gravity creates expensive transfer costs when moving large datasets between services, requiring smart caching and synchronization strategies. Terraform and Ansible help maintain consistency across environments, while FinOps practices control spending.

Managing Data Gravity: Transfer fees can consume a significant share of cloud budgets. Reduce them by compressing data before it moves, scheduling transfers during off-peak times, and establishing direct connections between regions.

Infrastructure as Code: Terraform manages resource creation across AWS, Azure, and GCP from centralized configurations. Ansible ensures consistent deployments and configurations across all environments.

Cost and Compliance Control: Use tools like OpenCost for unified spending visibility across clouds, establish clear resource usage policies, and automate optimizations for efficiency.
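
A small calculation makes the data gravity problem concrete. This sketch estimates the fee for moving a dataset once, with and without compression. The dataset size, per-GB price, and compression ratio are hypothetical; check your provider's current pricing before relying on any figure.

```python
def transfer_cost_usd(dataset_gb: float,
                      price_per_gb: float,
                      compression_ratio: float = 1.0) -> float:
    """Estimate the fee for moving a dataset once between providers.

    compression_ratio is original size divided by compressed size,
    so 4.0 means the data shrinks to a quarter of its size.
    """
    if compression_ratio < 1.0:
        raise ValueError("compression_ratio must be >= 1.0")
    return dataset_gb / compression_ratio * price_per_gb


# Hypothetical numbers: 50 TB dataset, $0.09 per GB egress, 4x compression.
raw = transfer_cost_usd(50_000, 0.09)
compressed = transfer_cost_usd(50_000, 0.09, compression_ratio=4.0)
print(f"raw: ${raw:,.0f}, compressed: ${compressed:,.0f}")
```

Multiply the result by how often the data moves per month, and repeated synchronization between clouds quickly becomes a line item worth tracking.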

2.4 Architectures for Edge and Federated Learning

Edge AI requires different thinking due to resource constraints and connectivity issues. Techniques like pruning and quantization compress large models for compact devices, while specialized hardware balances power and efficiency. Federated learning enables training on distributed data without centralizing everything.

Edge Optimization: Reduce models through pruning unnecessary connections, apply quantization to lower precision requirements, and use knowledge distillation to create compact versions matching larger model performance.

Hardware Options: NVIDIA Jetson provides GPU power for edge tasks, Google Coral offers Edge TPU acceleration, and frameworks like TensorFlow Lite and ONNX Runtime optimize inference for mobile and embedded devices.

Federated Learning: Train models on distributed devices without centralizing data, sharing only model updates to protect privacy and reduce data transfer.
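
The aggregation step of federated learning can be sketched in a few lines. The example below assumes the federated averaging approach, where a server combines client weights in proportion to each client's sample count. The type alias, function name, and client data are illustrative only.

```python
from typing import List, Sequence, Tuple

ClientUpdate = Tuple[Sequence[float], int]  # (local weights, local sample count)


def federated_average(updates: List[ClientUpdate]) -> List[float]:
    """Combine client weights, weighting each client by its sample count."""
    total_samples = sum(count for _, count in updates)
    if total_samples == 0:
        raise ValueError("no samples reported by any client")
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, count in updates:
        if len(weights) != dim:
            raise ValueError("all clients must share one model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * count / total_samples
    return averaged


# Three hypothetical devices; only weights and counts leave each device.
round_updates = [
    ([1.0, 0.0], 100),
    ([3.0, 2.0], 300),
    ([5.0, 4.0], 100),
]
print(federated_average(round_updates))
```

The raw training data never appears in `round_updates`, which is the privacy property the approach relies on. Production systems typically add secure aggregation or noise on top.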

Part 3: Building Production-Ready MLOps to Automate the Full AI Process

This section outlines technical approaches for operational AI scaling, emphasizing automation, repeatability, and comprehensive monitoring.

3.1 Building Strong Data and Feature Engineering Flows

Feature stores maintain consistency between training and production, letting teams share features without duplication. Automated validation in CI/CD pipelines catches data issues immediately, while data lineage tracking maintains quality throughout the process.

Feature Stores: Use Feast for flexible open-source solutions or Tecton for managed services. Both ensure consistent feature definitions for training and deployment, simplifying team collaboration.

Automated Validation and Lineage: Integrate validation steps that automatically check data patterns, and use tools like Datafold to detect drift and maintain data reliability across pipeline stages.
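
A validation step in a pipeline can start very simply. The sketch below checks each row of a batch against an expected type and value range. The schema layout, column names, and limits are hypothetical, and dedicated validation libraries cover far more cases.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical schema: column name -> (expected type, min value, max value)
Schema = Dict[str, Tuple[type, float, float]]


def validate_rows(rows: List[Dict[str, Any]], schema: Schema) -> List[str]:
    """Return human-readable errors; an empty list means the batch passed."""
    errors = []
    for idx, row in enumerate(rows):
        for column, (expected_type, low, high) in schema.items():
            if column not in row or row[column] is None:
                errors.append(f"row {idx}: missing {column}")
            elif not isinstance(row[column], expected_type):
                errors.append(f"row {idx}: {column} has wrong type")
            elif not low <= row[column] <= high:
                errors.append(f"row {idx}: {column} out of range")
    return errors


schema: Schema = {"age": (int, 0, 120), "income": (float, 0.0, 1e7)}
batch = [
    {"age": 34, "income": 52000.0},
    {"age": -1, "income": 48000.0},  # invalid age
    {"age": 51},                     # missing income
]
for problem in validate_rows(batch, schema):
    print(problem)
```

In a CI/CD job, a non-empty error list would fail the build so that bad data never reaches training.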

3.2 Scaling Up Model Testing and Training

Effective large-scale hyperparameter tuning needs smart automation that explores vast parameter spaces without wasting resources. Experiment tracking means logging complete training sessions for reproducible and shareable results.

Intelligent Hyperparameter Tuning: Combine Ray Tune with Optuna for distributed optimization across clusters, handling complex search spaces with dependent parameters and multi-objective goals.

Experiment Tracking: MLflow manages the complete experiment lifecycle including model registry, while Weights & Biases adds rich visualizations and collaboration features.
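
One way tuning tools avoid wasting resources is to stop weak trials early. The following sketch shows successive halving, a simple member of that family, using a toy loss function in place of real training. It does not call Ray Tune or Optuna, and the candidate learning rates are hypothetical.

```python
from typing import Callable, Dict, List

Config = Dict[str, float]


def successive_halving(configs: List[Config],
                       evaluate: Callable[[Config, int], float],
                       min_budget: int = 1,
                       eta: int = 2) -> Config:
    """Keep the best 1/eta of configs each round while growing the budget."""
    if not configs:
        raise ValueError("need at least one config")
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Lower loss is better; each config gets the same budget this round.
        ranked = sorted(survivors, key=lambda cfg: evaluate(cfg, budget))
        keep = max(1, len(ranked) // eta)
        survivors = ranked[:keep]
        budget *= eta
    return survivors[0]


def toy_loss(cfg: Config, budget: int) -> float:
    """Stand-in for a training run: best at lr = 0.1, improves with budget."""
    return abs(cfg["lr"] - 0.1) + 1.0 / budget


candidates = [{"lr": lr} for lr in (0.001, 0.01, 0.1, 1.0)]
best = successive_halving(candidates, toy_loss)
print(best)
```

Most of the compute goes to the promising configurations, since weak ones are dropped after only a small budget.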

3.3 CI/CD Built for Machine Learning

ML pipelines need testing beyond standard software checks, including data validation and model behavior analysis. Smart deployment strategies reduce risk by gradually releasing new models while monitoring performance.

Comprehensive Model Testing: Build test suites covering data validation, unit tests for model components, and behavioral tests checking fairness and robustness across scenarios.

Gradual Deployment: Use canary deployments for controlled releases to small user groups, shadow deployments to test against live traffic without affecting users, and A/B testing for head-to-head model comparisons.
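
A canary release needs a stable way to pick which users see the new model. This minimal sketch, assuming requests carry a user ID, hashes that ID into one of 100 buckets and routes the lowest buckets to the canary. The variant names and the 5% split are illustrative.

```python
import hashlib


def route_request(user_id: str, canary_percent: int) -> str:
    """Send a stable slice of users to the canary model.

    Hashing the user ID keeps each user on the same variant across requests.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


users = [f"user-{i}" for i in range(10_000)]  # hypothetical user IDs
share = sum(route_request(u, 5) == "canary" for u in users) / len(users)
print(f"canary share: {share:.3f}")  # roughly 0.05
```

Raising `canary_percent` step by step widens the rollout without reshuffling users who are already on the canary.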

3.4 Fast Model Serving and Inference That Delivers

Different inference types need different infrastructure: real-time APIs versus batch processing systems. Optimization techniques maximize hardware utilization while maintaining fast response times for user-facing applications.

Serving Patterns: Online inference provides real-time responses through REST APIs, batch processing handles large datasets efficiently, and streaming inference manages continuous data flows with consistent low latency.

Inference Optimization: NVIDIA Triton serves multiple models and frameworks simultaneously, using dynamic batching to automatically group requests and maximize GPU utilization while keeping added wait time within a configurable queue delay.
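
The trade-off behind dynamic batching is easier to see in code. The sketch below groups request arrival times into batches using a maximum batch size and a maximum wait window. It is a simplified illustration and not Triton's implementation; the arrival times and limits are hypothetical.

```python
from typing import List


def form_batches(arrival_times: List[float],
                 max_batch_size: int,
                 max_delay: float) -> List[List[float]]:
    """Group sorted request arrival times into batches.

    A batch closes when it is full or when the next request arrives
    more than max_delay seconds after the batch's first request.
    """
    batches: List[List[float]] = []
    current: List[float] = []
    for t in arrival_times:
        if current and (len(current) >= max_batch_size
                        or t - current[0] > max_delay):
            batches.append(current)
            current = []
        current.append(t)
    if current:
        batches.append(current)
    return batches


# Hypothetical arrivals in seconds, with a 5 ms window and batches of up to 4.
arrivals = [0.000, 0.001, 0.002, 0.010, 0.011]
print(form_batches(arrivals, max_batch_size=4, max_delay=0.005))
```

A larger window produces fuller batches and better GPU utilization, but the first request in each batch waits longer, so the window should be set against your latency budget.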

3.5 Deep Monitoring for AI That Actually Works

Production AI systems need monitoring beyond performance metrics to detect model degradation. Performance tracking and explainability tools help teams identify and fix issues quickly.

Drift Detection: Implement automatic detection for data drift (changing input patterns) and concept drift (shifting input-output relationships), using statistical tests and monitoring tools that trigger retraining when needed.

Explainability and Performance Tracking: Combine tools like SHAP or LIME for understanding model decisions with performance metrics like latency, throughput, and error rates in dashboards that help teams identify and resolve issues efficiently.
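
As one example of a statistical drift check, the sketch below computes the Population Stability Index (PSI) between a training sample and a production sample of a single feature. The bin edges, sample values, and the 0.2 alert threshold are assumptions for illustration; other tests such as Kolmogorov-Smirnov are equally valid choices.

```python
import math
from typing import List, Sequence


def histogram_shares(values: Sequence[float], edges: Sequence[float],
                     eps: float = 1e-6) -> List[float]:
    """Share of values in each bin [edges[i], edges[i+1]), floored at eps.

    Values outside the edges are ignored in this simplified version.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            if edges[i] <= v < edges[i + 1]:
                counts[i] += 1
                break
    total = max(sum(counts), 1)
    return [max(c / total, eps) for c in counts]


def population_stability_index(reference: Sequence[float],
                               current: Sequence[float],
                               edges: Sequence[float]) -> float:
    """PSI = sum((cur - ref) * ln(cur / ref)) over shared bins."""
    ref = histogram_shares(reference, edges)
    cur = histogram_shares(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
training = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]     # even spread
production = [0.6, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9, 0.95]  # shifted upward

DRIFT_THRESHOLD = 0.2  # common rule of thumb; an assumption to tune per feature
psi = population_stability_index(training, production, edges)
print(psi > DRIFT_THRESHOLD)  # True here, so a retraining job would be triggered
```

Running a check like this per feature on a schedule gives an early signal of data drift before accuracy metrics, which often lag, start to fall.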

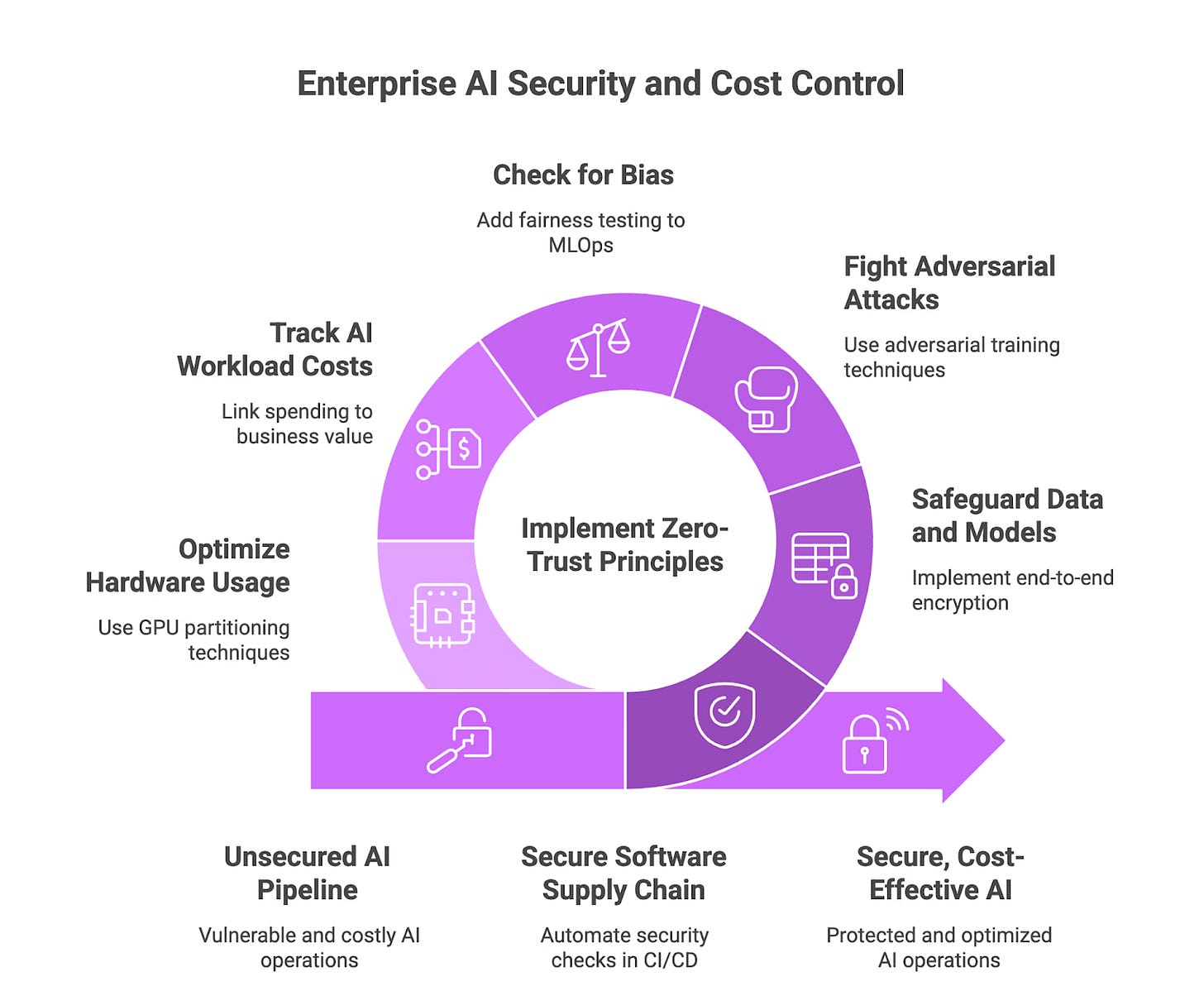

Part 4: Enterprise-Level Security, Governance, and Cost Control

This section covers essential operational requirements for enterprise-scale AI.

4.1 Zero-Trust Security Throughout Your AI Operations

Zero-trust means "never trust, always verify" across your entire AI pipeline, treating every component and access attempt as potentially hostile. Security must be built into every step from development through deployment, not added as an afterthought.

Supply Chain Security: Build automated security scanning into CI/CD pipelines to catch vulnerabilities in container images, third-party libraries, and infrastructure code before production deployment.

Data and Model Protection: Implement end-to-end encryption for data at rest and in transit, and use secure enclaves to create isolated hardware environments for processing sensitive information without exposure risk.

4.2 Specialized Model Security and Building Trust

AI systems face unique threats that can fool models or introduce unfair biases, requiring targeted defenses. These protections ensure models are accurate, reliable, and fair in their decisions.

Adversarial Defense: Common attacks include evasion (modifying inputs to cause misclassification) and data poisoning (corrupting training data). Defend against evasion using adversarial training (exposing models to attack examples during training) and defensive distillation (training a second model on the softened probability outputs of the first so that small input changes have less effect). Counter data poisoning by validating training data and tracking its lineage.

Bias and Fairness Testing: Integrate automated fairness testing into MLOps pipelines to identify and correct bias in datasets and model predictions, ensuring fair outcomes across all user groups.
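
A fairness gate in a pipeline can begin with a single metric. This sketch computes the demographic parity gap, meaning the largest difference in positive-prediction rate between groups. The group labels, predictions, and 0.2 threshold are hypothetical, and demographic parity is only one of several fairness definitions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def positive_rates(records: List[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive predictions (1) per group from (group, prediction) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, prediction in records:
        totals[group] += 1
        positives[group] += prediction
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(records: List[Tuple[str, int]]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(records).values()
    return max(rates) - min(rates)


# Hypothetical predictions for two groups, A and B.
preds = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
         ("B", 1), ("B", 0), ("B", 0), ("B", 0)]

MAX_GAP = 0.2  # hypothetical policy threshold for the pipeline gate
gap = demographic_parity_gap(preds)
print(gap, gap <= MAX_GAP)  # 0.5 False: this model would fail the gate
```

Wiring this check into the same test suite as the unit tests means a biased model is blocked the same way a failing build is.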

4.3 Smart Financial Management for AI: Keeping Costs Under Control

AI workloads can be expensive, so FinOps practices help track spending and tie it directly to business value. This provides clear visibility into where money goes and how to optimize resource usage.

Cost Attribution and Showback: Implement systems to accurately track AI workload costs and attribute spending to specific teams or projects, often using showback approaches that provide visibility without direct billing.

Resource Optimization: Maximize hardware efficiency through techniques like GPU partitioning. Set up multi-instance GPUs to run multiple smaller jobs on one GPU, and use spot instances for training workloads that can tolerate occasional interruptions.
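
A showback report is essentially a grouped sum over tagged usage. The sketch below totals GPU cost per team and prices spot and on-demand hours separately. The record fields, team names, and hourly rates are hypothetical; in practice the input would come from billing exports or a tool such as OpenCost.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class UsageRecord(NamedTuple):
    team: str
    gpu_hours: float
    spot: bool  # True if the job ran on interruptible capacity


def showback_report(records: List[UsageRecord],
                    on_demand_rate: float,
                    spot_rate: float) -> Dict[str, float]:
    """Total cost per team, pricing spot and on-demand hours separately."""
    costs: Dict[str, float] = defaultdict(float)
    for rec in records:
        rate = spot_rate if rec.spot else on_demand_rate
        costs[rec.team] += rec.gpu_hours * rate
    return dict(costs)


# Hypothetical usage and hourly rates in USD.
usage = [
    UsageRecord("search", 100, spot=False),
    UsageRecord("search", 400, spot=True),
    UsageRecord("vision", 200, spot=False),
]
print(showback_report(usage, on_demand_rate=4.0, spot_rate=1.5))
```

In this example the search team runs four times as many hours on spot capacity as on demand, yet the spot portion costs only 1.5 times as much, which is the kind of comparison showback makes visible.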

Conclusion

Building scalable AI infrastructure goes beyond meeting current needs—it's about preparing for the future. The strategies and frameworks in this guide provide a foundation that grows with your organization, handles increased workloads, and incorporates new technologies as they mature. Focus on building flexible systems that enable experimentation while keeping production stable.

Large Language Models and Foundation Models are pushing infrastructure demands to unprecedented levels, requiring massive compute clusters and specialized memory configurations for their growing size and complexity. Meanwhile, AIOps is shifting from reactive problem-solving to proactive system management, using AI to predict and prevent issues before they impact users.

Future AGI enhances AI infrastructure through its Dataset, Prompt, Evaluate, Prototyping, Observability, and Protect modules, streamlining the entire GenAI lifecycle. Explore Future AGI App now to build, scale, and protect your AI systems.

FAQs

Why is a feature store important for production AI?

A feature store keeps training and inference in sync, cuts repeat data work, and lets teams share vetted features quickly.

How can I control multi-cloud costs for AI workloads?

Track spending with a showback model, tag every resource, and use tools such as OpenCost to spot idle GPUs and shut them down before they drain the budget.

What network setup best supports large-scale distributed training?

Combine high-bandwidth fabrics like RoCE or InfiniBand with GPUDirect RDMA so GPUs exchange data directly and avoid slow CPU hops.

How can Future AGI help in AI infrastructure?

Future AGI can watch model behaviour in real time, auto-tune resources, and flag data drift before it hurts accuracy, giving teams a proactive safety net.
